Investigative journalists have been among the earliest adopters of artificial intelligence in the newsroom, and pioneered some of its most compelling — and award-winning — applications. In this first part of a draft book chapter, I look at the different branches of AI and how they’ve been used in a range of investigations.

Investigative journalism’s close relationship with data journalism and open source intelligence (OSINT) provides fertile ground for experimentation with AI, and while the explosion of generative AI has opened up further territory for innovation, investigative journalism’s use of AI technology has so far been largely focused elsewhere.

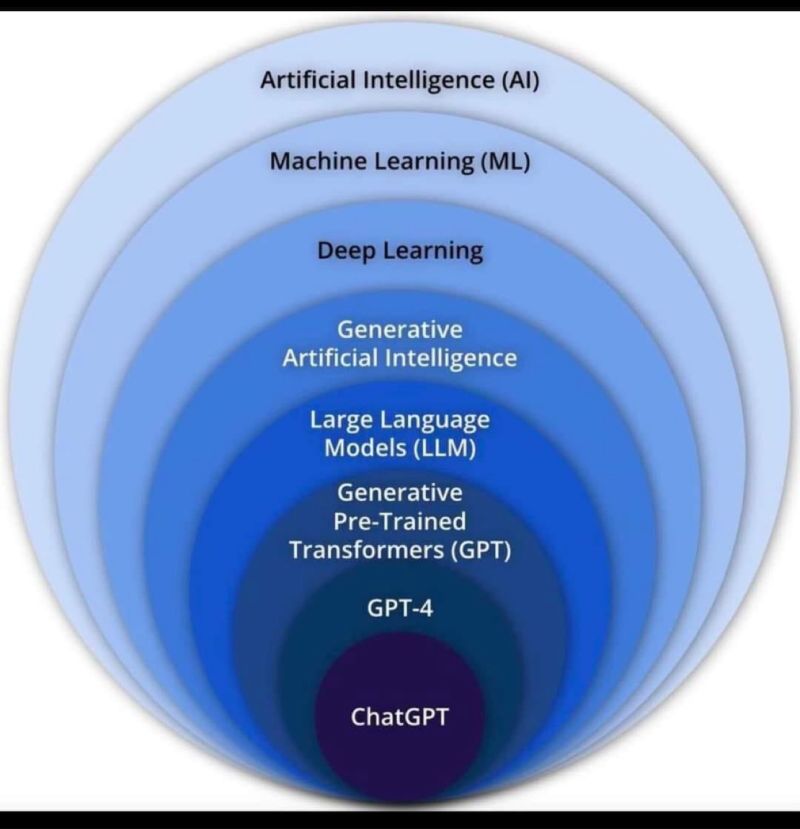

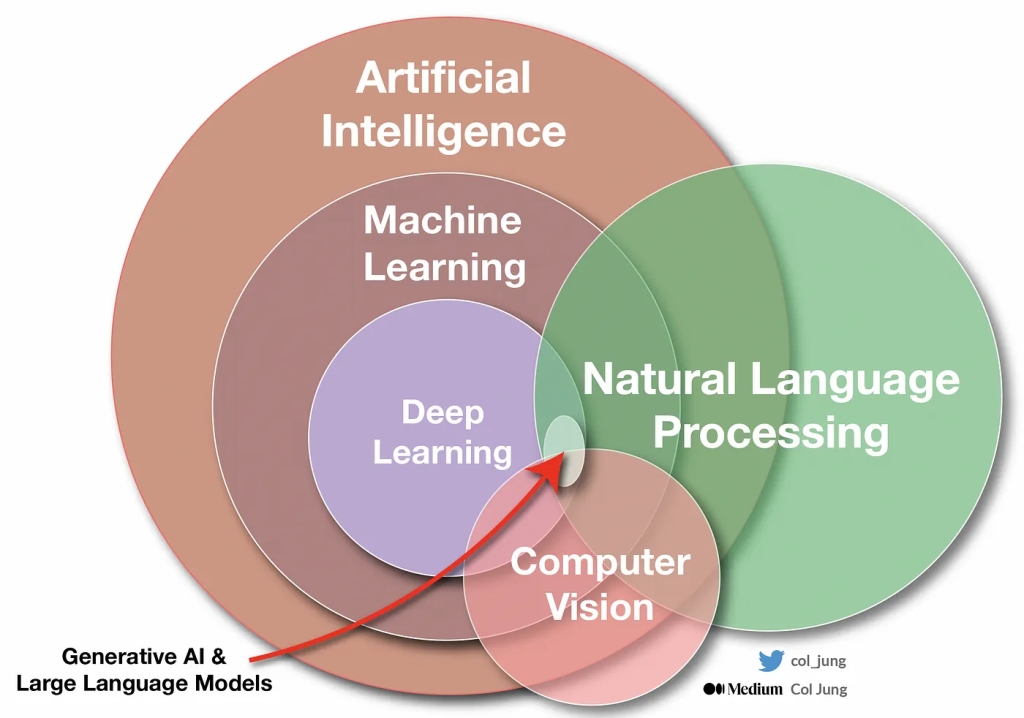

Although there is no widely accepted definition of the term (Wang, 2019; Russell, Norvig & Chang, 2022), within journalism “artificial intelligence” has been used to refer to a range of technologies whose functions range from classifying documents to the generation of video or images. But the technology has many branches, often with their own applications and challenges.

Tools such as ChatGPT and Google’s Gemini, for example, use a form of AI known as large language models (LLMs). These are part of the wider field of generative AI which includes image generation tools such as DALL-E and Midjourney, video generation tools such as OpenAI’s Sora and audio tools including Meta’s AudioCraft.

These models are trained on large datasets of text, images, video or audio, and build media by essentially predicting each word, pixel or sound as they write, ‘draw’ or compose. That prediction is what gives an appearance of intelligence, but it doesn’t mean that the result is always going to be factually correct. Factual inaccuracies are such a recurring problem that a specific term has been coined to describe them: ‘hallucinations’.

Generative AI, in turn, is part of the branch of AI known as ‘deep learning’, which is itself a branch of the wider field of machine learning.

Machine learning involves training an algorithm to predict, classify, or cluster inputs into associated groups. By 2018 this form of AI was already being used by three quarters of ‘digital leaders’ in one survey for purposes ranging from content recommendation to fact-checking, and within investigative journalism specifically, by 2024 machine learning was being used by two of the 15 winners of the Pulitzer Prize.

The two most common ways to train an algorithm are known as supervised and unsupervised learning.

Unsupervised learning involves letting the algorithm cluster data into any patterns it identifies, and it needs very little information about that data — which makes it a powerful way of identifying groups of related documents or words, for example.

Supervised learning, in contrast, requires training data which has been labelled in some way. This makes it more useful for classifying new data (based on how the training data was labelled) or making predictions (based on relationships it identifies between differently labelled data).
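The difference between the two approaches can be sketched in a few lines of Python. This is only an illustration under invented assumptions: the toy data, the two features and the function names are all made up, and real projects would normally use a library rather than hand-written code. The supervised half learns from hand-labelled rows and returns a known label for a new row; the unsupervised half receives no labels and simply returns groups.

```python
from statistics import mean

def sq_dist(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def fit_centroids(rows, labels):
    """Supervised: learn one average point per human-assigned label."""
    centroids = {}
    for label in set(labels):
        members = [row for row, lab in zip(rows, labels) if lab == label]
        centroids[label] = tuple(mean(col) for col in zip(*members))
    return centroids

def predict(centroids, row):
    """Classify a new row by its closest labelled centroid."""
    return min(centroids, key=lambda label: sq_dist(centroids[label], row))

def kmeans(rows, k, iterations=10):
    """Unsupervised: group rows into k clusters without any labels."""
    centroids = list(rows[:k])  # naive start: the first k rows
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for row in rows:
            nearest = min(range(k), key=lambda i: sq_dist(centroids[i], row))
            clusters[nearest].append(row)
        centroids = [
            tuple(mean(col) for col in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return clusters

# Invented toy data: two made-up features per account, each scaled 0 to 1
accounts = [(0.1, 0.2), (0.9, 0.8), (0.2, 0.1), (0.8, 0.9)]
labels = ["real", "fake", "real", "fake"]  # hand-labelled training data

centroid_model = fit_centroids(accounts, labels)
print(predict(centroid_model, (0.7, 0.95)))  # supervised: one of the known labels
print(kmeans(accounts, k=2))                 # unsupervised: unnamed groups
```

Note that the clustering output carries no names: it is still up to the journalist to work out what, if anything, each group means.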

The use of AI in investigative journalism

Artificial intelligence’s wide range of applications have been used in all stages of journalism, from idea generation and alerts, to story research, production, publication, distribution, feedback and archiving (Hanson, Roca-Sales, Keegan and King 2017; Gibbs 2024). But within investigative journalism specifically, it has applications in at least nine scenarios. These include:

- Establishing a problem — or its scale

- Finding the needles in the haystack: reducing the scale of an editorial project

- Modelling and predictions

- Algorithmic accountability: unmasking a system

- Text-as-data: natural language processing

- Extraction, matching and cleaning

- AI in the sky: satellite and other imagery

- Sensors and acoustic-based machine learning

- New storytelling forms and outputs

Establishing a problem — or its scale

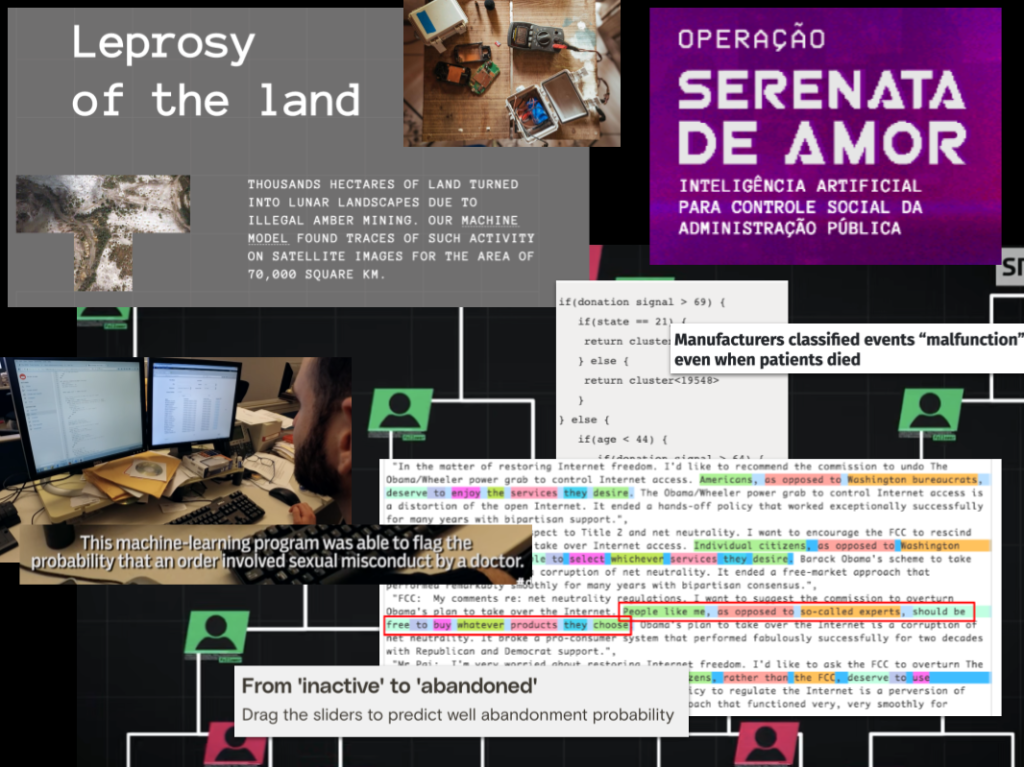

When the data journalism team at Swiss broadcaster SRF decided to investigate the use of fake followers by social media influencers, they turned to machine learning. The team created a dataset of Instagram accounts which they had already classified as either fake or real, and then used this to train an algorithm that could be applied to millions of followers of Swiss influencers.

The results established that the practice of buying fake followers was rife in the industry — and, for the first time, the scale of the problem (almost one in three followers). It also provided evidence to confront the influencers themselves on camera, whose responses were as telling as the data itself.

A year earlier BuzzFeed had used the same techniques to establish just how widespread ‘spy planes’ were in US airspace and how they behaved, including the fact that they seemed to be circling around mosques and areas with large Muslim populations. The team had already reported on individual instances of spy planes, and machine learning provided a way to expand that.

Reducing a challenge to human scale

A better description of machine learning’s potential for investigations, then, might be as a filtering tool. When the Atlanta Journal-Constitution wanted to establish the scale of doctors being allowed to continue to practise after being found guilty of sexual misconduct, for example, it used machine learning to reduce a set of 100,000 documents to around 6,000 that were likely to relate to that offence.

Reporter Danny Robbins had already established that in two-thirds of cases in Georgia alone, “doctors either didn’t lose their licenses or were reinstated after being sanctioned”, but public records requests had led to a dead end when it turned out most bodies didn’t record the vital information they needed.

So the data journalism team wrote a script to gather over 100,000 disciplinary documents. Machine learning was used to train an algorithm to identify documents related to sexual misconduct, and filter that unmanageable dataset to the much smaller subset that could then be manually checked and classified.
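A filtering step of this kind might look something like the sketch below. It is not the Atlanta Journal-Constitution's code: the classifier (a small naive Bayes model written from scratch), the example sentences and every function name are assumptions chosen for illustration. The idea is that a handful of hand-labelled documents train a model, which then scores unlabelled documents so that only the likely matches go forward for manual checking.

```python
import math
import re
from collections import Counter

def tokens(text):
    """Lower-case a document and split it into words."""
    return re.findall(r"[a-z']+", text.lower())

def train_naive_bayes(labelled_docs):
    """labelled_docs: (text, is_relevant) pairs labelled by hand."""
    word_counts = {True: Counter(), False: Counter()}
    doc_counts = Counter()
    for text, label in labelled_docs:
        word_counts[label].update(tokens(text))
        doc_counts[label] += 1
    vocab = set(word_counts[True]) | set(word_counts[False])
    return word_counts, doc_counts, vocab

def relevance_score(model, text):
    """Log-odds that a document is relevant; above 0 leans 'relevant'."""
    word_counts, doc_counts, vocab = model
    score = math.log(doc_counts[True] / doc_counts[False])
    for label, sign in ((True, 1), (False, -1)):
        total = sum(word_counts[label].values())
        for word in tokens(text):
            if word in vocab:  # words never seen in training are ignored
                # Add-one (Laplace) smoothing avoids zero probabilities
                p = (word_counts[label][word] + 1) / (total + len(vocab))
                score += sign * math.log(p)
    return score

def shortlist(model, docs, threshold=0.0):
    """Keep only the documents worth a human's time."""
    return [doc for doc in docs if relevance_score(model, doc) > threshold]

# Invented examples standing in for hand-labelled disciplinary orders
labelled = [
    ("board finds physician engaged in sexual misconduct with a patient", True),
    ("license suspended after inappropriate sexual contact with patient", True),
    ("physician failed to maintain adequate medical records", False),
    ("license suspended for improper prescribing of controlled substances", False),
]
unlabelled = [
    "order concerning sexual contact with a patient during examination",
    "consent order regarding prescribing records",
]

nb_model = train_naive_bayes(labelled)
print(shortlist(nb_model, unlabelled))
```

Lowering the threshold sends more documents to the human reviewers but misses fewer relevant ones, which is usually the safer trade-off when the shortlist will be checked by hand anyway.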

A similar process was followed by the International Consortium of Investigative Journalists in their investigation into medical device harm. In this case millions of records obtained through public records requests were screened by an algorithm trained to “identify reports in which the description of an adverse event indicated that a patient had died, but the death was misclassified as malfunctions or injuries”. The results were checked manually by a team of journalists.

Training an algorithm to identify misclassified events was also the basis for the LA Times investigation LAPD underreported serious assaults, skewing crime stats for 8 years.

While most ‘needle in the haystack’ scenarios involve one-off projects or megaleaks, factchecking presents a longer timescale for the development of AI within watchdog reporting.

A review of attempts to automate factchecking finds that “existing methods are yet far from matching human performance due to the profoundly challenging nature of the issue”, but the tools do not have to match human performance to be useful: one tool developed by Madrid-based media company Newtral to identify claims on social media that need checking was estimated to result in 80% time savings on monitoring tweets.

It is the next stage in the process which is problematic: determining whether a statement is factual or not — or indeed, whether a statement might be factual, but misleading (for example by not including vital context). Not only are many large language models trained on out-of-date data of varying quality, but, as Andy Dudfield, head of AI at one UK factchecking organisation, says, they: “don’t know what facts are. [It] is a very subtle world of context and caveats”.

Predictions and modelling

The potential scale or location of future problems can also be established by using machine learning’s ability to predict future events.

In the award-winning Grist and Texas Observer series Waves of Abandonment, reporters had compiled a wide-ranging database on oil in Texas (covering everything from drilling history, depth and location, to oil prices, employment and macroeconomic indicators) and saw an opportunity to use it to identify potential problems. Senior data reporter Clayton Aldern says:

“At one point we asked, could we use this to figure out the future? This was a classification problem: which wells might be abandoned in the next couple years. And it’s a perfect question for machine learning.”

The model allowed the reporters to write a story about the potential cost to taxpayers of the coming problem. Historically this type of story would have relied on academics deciding to identify the same issue and conducting analysis over many years — but machine learning made it possible for reporters to take the initiative over a shorter timescale.

Modelling was also behind a story on repossessions by Eye on Ohio: data journalist Lucia Walinchus wanted to find out what made banks more likely to repossess some homes than others, and a model made it possible to identify which factors played a role:

“In absolute numbers, parcels going to the land bank in African-American neighborhoods far outnumbered those in school districts where most students are white … But keeping the amount owed and the location to high value properties constant, the model predicted a tax-delinquent property in a majority-white district would be chosen over a minority one.”

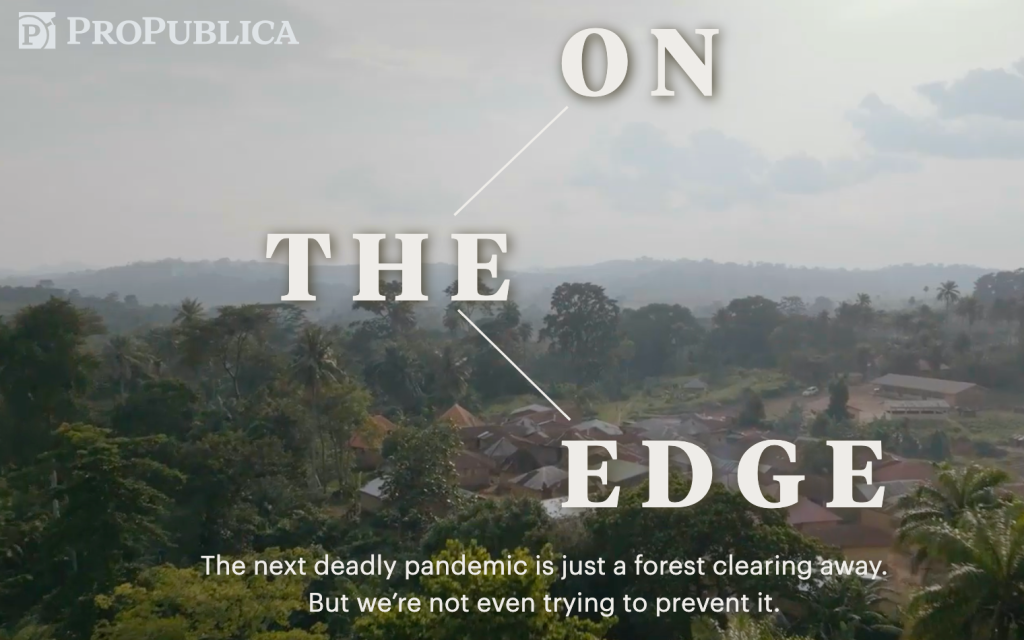

And when ProPublica wanted to find out what caused Ebola outbreaks in order to ask whether governments were doing enough, it turned to machine learning models too, collaborating with scientists to identify common ecological factors in the outbreaks. The model was able to identify deforestation as a major risk factor, and highlight Nigeria as being particularly at risk.

Algorithmic accountability

ProPublica has been a leader in the use of machine learning, employing it as early as 2012 to reverse-engineer political microtargeting. The team built a dataset of 30,000 political emails along with the demographics of the recipients, then used machine learning to understand how messages were being algorithmically tailored for different recipients.

This process of using algorithms to shine a spotlight on algorithms and hold them to account has become known as “algorithmic accountability” reporting. In his 2014 report on the field, Nicholas Diakopoulos explains the rise of this new branch of watchdog journalism:

“Algorithms, driven by vast troves of data, are the new power brokers in society … [A]lgorithmic power isn’t necessarily detrimental to people; it can also act as a positive force … What we generally lack as a public is clarity about how algorithms exercise their power over us. With that clarity comes an increased ability to publicly debate and dialogue the merits of any particular algorithmic power.”

ProPublica’s series ‘Machine Bias’ is one of the longest-running and most high-profile examples of algorithmic accountability reporting: between 2015 and 2022 it investigated biases in software used to inform criminal sentencing, uncovered discrimination in Facebook advertising tools and in auto insurance premium calculators, and revealed Amazon’s tendency to prioritise its own products over cheaper alternatives.

Also established in 2015 was the European ‘AI forensics and reverse engineering task force’ Tracking Exposed, which focused specifically on the algorithms used by content platforms. In 2023 the project was succeeded by AI Forensics, with a new focus on election integrity and generative AI.

Elsewhere the collaborative journalism project Lighthouse Reports used similar techniques to investigate algorithmic profiling used by Dutch local government, which the United Nations Special Rapporteur on extreme poverty and human rights compared to:

“The digital equivalent of fraud inspectors knocking on every door in a certain area and looking at every person’s records in an attempt to identify cases of fraud, while no such scrutiny is applied to those living in better off areas.”

Algorithmic accountability reporting does not require the use of AI algorithms: German public broadcaster Bayerischer Rundfunk (BR)’s report on ‘Black Box Reporting’ (PDF) identifies four approaches to investigating AI, namely the use of access to information laws, analysing the outputs of automated systems, data analysis, and using interviews and documents.

This last approach is used throughout the award-winning ‘Working for an Algorithm’ series by nonprofit publication The Markup including stories such as ‘What Happens When Nurses Are Hired Like Ubers’ and ‘Secretive Algorithm Will Now Determine Uber Driver Pay in Many Cities’.

Investigating text-as-data

One branch of AI which often uses machine learning is Natural Language Processing (NLP), a technology that allows computers to appear to understand language. As well as facilitating translation, summarisation and optical character recognition (OCR) — which extracts text from images such as scanned PDFs — NLP includes a number of techniques that have applications within investigative journalism.

Sentiment analysis, for example, is a branch of NLP used to classify textual material as likely to be positive, negative, or neutral. A Washington Post investigation into audits of an international development agency used this technique to compare language that was removed from agency audits before publication, identifying that “more than 400 negative references were removed from the audits between the draft and final versions.”
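One very simple way to approximate this kind of comparison is to count words from a list of negative terms in each version of a document. The sketch below makes no claim to reproduce the Washington Post's method: the word list, the two sentences and the function name are all invented, and real sentiment analysis tools typically rely on trained models or much larger lexicons.

```python
import re

# Hypothetical lexicon: a real one would contain thousands of terms
NEGATIVE_WORDS = {"failed", "waste", "mismanagement", "improper", "deficient"}

def negative_references(text):
    """Return every word in the text that appears in the negative lexicon."""
    words = re.findall(r"[a-z]+", text.lower())
    return [word for word in words if word in NEGATIVE_WORDS]

# Invented draft and final versions of the same audit sentence
draft = "The mission failed to monitor spending, leading to waste and improper payments."
final = "The mission could strengthen its monitoring of spending and payments."

removed = len(negative_references(draft)) - len(negative_references(final))
print(negative_references(draft))
print(removed)
```

A word count like this ignores context (negation, sarcasm, technical uses of a word), which is one reason results still need to be read and checked by a reporter.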

Topic modeling is an NLP technique using unsupervised learning to take a text-based dataset and classify it into a specified number of ‘clusters’ based on shared language. This technique was used by Jeff Kao to identify suspicious patterns within millions of submissions to a public consultation on net neutrality, providing evidence of an automated disinformation campaign, and also by Associated Press journalists to identify accidents in schools involving law enforcement officers and educators’ firearms, from 140,000 incident reports.

A more widely used application of NLP in investigations is named entity recognition — or entity extraction. Journalists dealing with large document caches or leaks use this technology to generate lists of the people, places, organisations and key concepts within documents, and more quickly navigate between mentions of a target entity, saving large amounts of time in an investigation.

Extraction, matching and cleaning at scale

AI’s ability to extract data from documents is another attraction for investigative journalists. A common example is the challenge of unlocking data published in PDFs to construct a knowledge base (“knowledge base construction”): an obstacle to investigations which has typically required large amounts of time and manpower to tackle. Google products Cloud Document AI and Pinpoint make it possible to automate the extraction of structured data from datasets in PDFs — but there are challenges.

As with most AI products, the models are not 100% accurate, requiring some degree of checking which can make it just as time-consuming as the manual alternative.

Jonathan Stray, whose Deepform project used a subset of machine learning called deep learning to extract information from PDFs disclosing political advertising spending on TV and cable, describes the challenge:

“[It] successfully extracted the easiest of the fields (total amount) at 90% accuracy using a relatively simple network. [This] is a good result, [but] it’s probably not high enough for production use.”

Stray also outlines the challenges of off-the-shelf AI tools which:

“Either require manual interaction to extract data (so they can’t be used on bulk documents) or they use a template to extract data from a single type of form (which necessitates setup work for each type.) They don’t do well when the forms are heterogeneous, even if they all contain the same type of data … We are beginning to see more flexible AI-driven form extraction products that can learn form layouts, such as Rossum.ai. But this sort of technology is proprietary and often specialized to specific types of common business documents”

Another example of AI’s potential to assist with constructing a knowledge base is helping to match two separate datasets. One investigation into property tax evasion, for example, used Locality Sensitive Hashing (LSH) — a branch of unsupervised machine learning which groups together similar records into ‘buckets’ — to match property data with utilities data. Again, this method is not 100% accurate, and will result in both false negatives (matches being missed) and false positives (matches being made which are incorrect), so configuring the algorithm to be more particular, and/or to use more fields from the data, is an important step.
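To make the idea of ‘buckets’ more concrete, here is a minimal sketch of one common form of LSH (MinHash with banding), written with the Python standard library only. It is an assumption that the investigation used anything like this particular variant; the addresses, record IDs, function names and parameter values are invented for illustration.

```python
import hashlib
from collections import defaultdict
from itertools import combinations

def shingles(text, size=3):
    """Normalise a string and break it into overlapping character n-grams.
    Assumes the text is at least `size` characters long."""
    cleaned = " ".join(text.lower().split())
    return {cleaned[i:i + size] for i in range(len(cleaned) - size + 1)}

def seeded_hash(seed, shingle):
    """One member of a family of hash functions, selected by seed."""
    digest = hashlib.md5(f"{seed}:{shingle}".encode()).hexdigest()
    return int(digest, 16)

def minhash_signature(text, num_hashes):
    """Similar strings tend to share many signature positions."""
    grams = shingles(text)
    return tuple(min(seeded_hash(seed, g) for g in grams)
                 for seed in range(num_hashes))

def candidate_pairs(records, bands=10, rows=2):
    """records: dict of id -> text. Pairs sharing any bucket become candidates."""
    buckets = defaultdict(set)
    for rec_id, text in records.items():
        signature = minhash_signature(text, bands * rows)
        for b in range(bands):
            band = signature[b * rows:(b + 1) * rows]
            buckets[(b, band)].add(rec_id)
    pairs = set()
    for ids in buckets.values():
        pairs.update(combinations(sorted(ids), 2))
    return pairs

# Invented records from two hypothetical datasets
records = {
    "property-1": "12 Oak Street, Springfield",
    "utility-1": "12  oak street, springfield",   # same address, messier formatting
    "utility-2": "12 Oak St, Springfield",        # abbreviated: likely, not certain, to match
    "utility-3": "98 Maple Avenue, Rivertown",    # unrelated address
}

for pair in sorted(candidate_pairs(records)):
    print(pair)
```

Using more rows per band makes the matching more particular (fewer false positives, more false negatives), while using more bands does the opposite. Candidate pairs would still need to be compared directly, and ideally on more than one field, before being treated as matches.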

Cleaning data in order to match information from different sources is also the focus of DataMade’s Entity-Focused Data System project, built in partnership with the Atlanta Journal-Constitution. The project helps journalists and researchers to connect people and organisations even when they are named slightly differently in different datasets, using a branch of natural language processing (NLP) called “probabilistic parsing”. This uses statistical methods to ‘parse’ a name into forename, surname and title, for example; or an address into city, zip code, street name and number.

Similar parsing and cleaning has been done with generative AI tools, although it has been noted that accuracy continues to be a challenge.

AI in the sky: satellite journalism

A particular use for machine learning has been found in the growing field of satellite journalism: stories about illegal mining operations, human rights violations, sanctions breaches and war crimes have all drawn on this application of artificial intelligence to find the ‘needle in the haystack’.

In these cases a separate branch of machine learning is involved: object detection. This involves training a machine learning algorithm to identify objects within images. In 2023, for example, the New York Times visual investigations team used this technique to look for evidence of the use of 2,000-pound bombs by Israel in southern Gaza. An object detection tool was trained to identify the craters created by the bombs, so that potential matches could then be reviewed by journalists, who removed false positives before confirming “hundreds of those bombs were dropped … particularly in areas that had been marked as safe for civilians … it’s likely that more of these bombs were used than what was captured in our reporting.”

Sensors and acoustic-based machine learning

Compared to text and images, audio is a relatively under-explored source for artificial intelligence in investigative journalism. Environmental nonprofit Rainforest Connection, however, has pioneered the use of machine learning with acoustic monitoring in remote areas to detect illegal logging. The approach relies on cheap sensors and has evolved from detecting chainsaws to: “detecting species, gunshots, voices, things that are more subtle”. The same techniques have been used by the group to measure a number of different impacts of climate change — and even track infectious disease risk.

Audio material can be used to train a machine learning algorithm in the same way as text or media, opening up the potential for using AI to trawl through audio recordings to identify a particular speaker, or pick out certain categories of event. At Spanish language media group PRISA these techniques were used to create VerificAudio, an experimental tool to detect audio deepfakes.

New forms and sources of engagement

While AI’s most obvious uses may relate to handling large amounts of information, it has also made it possible to explore new ways of telling and distributing that information journalistically. The Brazilian watchdog project Operation Serenata de Amor (serenata.ai), for example, not only demonstrates the potential of machine learning for monitoring politicians’ expenses (it is an algorithm designed to identify suspicious expense claims), but by linking it to an automated Twitter account, @RosieDaSerenata, the journalists attempt to scale up the process of involving the audience and seeking a response from the politician involved.

The account, which operated until 2022, tweeted details when the algorithm flagged claims as warranting further scrutiny, inviting the politician involved to explain further. One of the journalists involved in the project said:

“We are living in a time in which congresspeople argue with robots on Twitter. We made democracy more accessible. People can ask for information about public expenditures straight to the politician and get the answer in a tweet.”

The translation and summarisation functionality offered by NLP can also play a role in making stories accessible to new audiences by creating tools to inform and empower them: one website and browser extension called Polisis (created by researchers at the Federal Institute of Technology at Lausanne, the University of Wisconsin and the University of Michigan) offers to help audiences understand privacy policies:

“Polisis can read a privacy policy it’s never seen before and extract a readable summary, displayed in a graphic flow chart, of what kind of data a service collects, where that data could be sent, and whether a user can opt out of that collection or sharing. Polisis’ creators have also built a chat interface they call Pribot that’s designed to answer questions about any privacy policy, intended as a sort of privacy-focused paralegal advisor. Together, the researchers hope those tools can unlock the secrets of how tech firms use your data that have long been hidden in plain sight.”

Personalisation or ‘versioning’ stories for different audiences, or from different inputs, is also made easier by AI technologies, specifically Natural Language Generation (NLG). The most basic NLG tools require journalists to write a skeleton template article with gaps that are filled with either numbers from a connected dataset, or words and phrases from lists (e.g. ‘rose’, ‘fell’, ‘remained stable’) based on whether a condition they describe (e.g. that last year’s number is higher or lower than this year’s) is met. This ensures quality control but “reduce[s] text variability, increasing repetition and the potential to bore readers” (Diakopoulos, 2019: 126).
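A template of this basic kind can be sketched in a few lines of Python. The template wording, the field names and the figures below are invented for illustration and do not come from any particular NLG product.

```python
def trend_phrase(this_year, last_year):
    """Pick a phrase from a fixed list based on a simple condition."""
    if this_year > last_year:
        return "rose"
    if this_year < last_year:
        return "fell"
    return "remained stable"

# Skeleton sentence written by a journalist, with gaps for data
TEMPLATE = ("Recorded burglaries in {area} {trend} this year: "
            "{this_year}, compared with {last_year} last year.")

def write_story(row):
    """Fill the template from one row of a dataset."""
    trend = trend_phrase(row["this_year"], row["last_year"])
    return TEMPLATE.format(trend=trend, **row)

# Invented dataset: one row per area produces one version of the story
rows = [
    {"area": "Northtown", "this_year": 120, "last_year": 100},
    {"area": "Southvale", "this_year": 80, "last_year": 95},
    {"area": "Eastport", "this_year": 60, "last_year": 60},
]

for row in rows:
    print(write_story(row))
```

Every sentence the template can produce has, in effect, been approved in advance, which is where the quality control comes from; the price is that all three stories read almost identically.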

Generative AI tools can also be prompted to generate multiple versions of a story based on different rows in a dataset, removing the need for a template — but also introducing more scope for error.

More advanced NLG might involve a level of Natural Language Understanding (NLU), selecting and extracting information from source material based on an algorithmically-informed judgement about importance and relevance, or computer vision if the algorithm is expected to generate images or video from visual source material. Both might also be used to invite the user to provide information about themselves which can be used to shape the resulting story.

In a second part of the chapter, published next week, I look at the themes and challenges emerging from the use of AI in investigative journalism projects.
