Previously I wrote on how to use the =importXML formula in Google Docs to pull information from an XML page into a conventional spreadsheet. In this Something For The Weekend post I’ll show how to take that formula further to grab information from webpages – and get updates when that information changes.

Asking questions of a webpage – or find out when the answer changes

Despite its name, the =importXML formula can be used to grab information from HTML pages as well. This post on SEO Gadget, for example, gives a series of examples ranging from grabbing information on Twitter users to price information and web analytics (it also has some further guidance on using these techniques, and is well worth a read for that).

Asking questions of webpages typically requires more advanced use of XPath than I outlined previously – and more trial and error.

This is because, while XML is a language designed to provide structure around data, HTML – used as it is for a much wider range of purposes – isn’t quite so tidy.

Finding the structure

To illustrate how you can use =importXML to grab data from a webpage, I’m going to grab data from Gorkana, a job ads site.

If you look at their journalists jobs page, you’ll see all sorts of information, from navigation and ads to feeds and policies. This is how you could grab a specific piece of data from a page, and put it into a table structure, to answer any questions you might have:

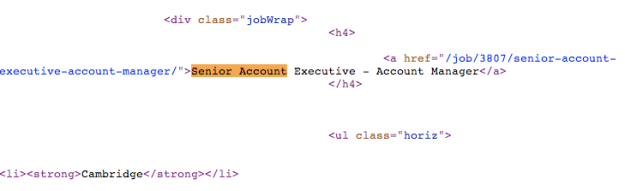

Make a note of the first word or phrase in the section you want (e.g. “Senior account executive”) then right-click on the page and select View Source or whatever option allows you to see the HTML code behind the page.

You could scroll through this to try to find the bit you want, but it’s easier to use your search facility to find that key phrase you noted earlier (e.g. “Senior account executive”)

What you’re hoping to find is some sort of div class tag just above that key phrase – and in this case there’s one called div class=”jobWrap”

This means that the creator of the webpage has added some structure to it, wrapping all their job ads in that div.

We just need to write a formula that is equally specific.

Writing the formula

Open up a spreadsheet in Google Docs and write the following formula in cell B1:

=importXML(“http://www.gorkanajobs.co.uk/jobs/journalist/”, “//div[starts-with(@class, ‘jobWrap’)]“)

When you press Enter you should see 3 columns filled with values from that particular part of the webpage: the job title; the package; and a brief description. Now that you have this data in a structured format you could, for example, work out average wages of job ads, or the most common job titles.

But how did that formula work? As I’ve explained most of =importXML in the previous post, I’ll just explain the query part here. So:

//div

is looking for a tag that begins

[starts-with

is specifying that the this div must begin in a particular way, and it does so by grabbing one thing, and looking for another thing within it:

(@class, ‘jobWrap’)

is saying that the div class should contain ‘jobWrap’ (bonus points: the @ sign indicates an attribute; class is an attribute of the div; ‘jobWrap’ is the value of the attribute)

]

…finishes off that test.

Even if you don’t understand the code itself, you can adapt it to your own purposes as long as you can find the right div class tag and replace ‘jobWrap’ with whatever the value is in your case.

It doesn’t even have to be a div class – you could replace //div with other tags such as //p for each paragraph fitting a particular criteria.

You can also replace @class with another attribute, such as @id or @title. It depends on the HTML of the page you’re trying to grab information from.

Where’s the structure come from?

Why has this data been put into 3 columns? The answer is in the HTML again. You’ll see that the job title is between h4 tags. The location and package is within ul tags and the description is within p tags, before each div is closed with the tag /div

But if you keep reading that HTML you’ll also see that after that /div there is some more information within a different div tag: div class=”adBody”. This contains the name of the recruiter and a link to a page where you can apply. There’s also a third div with a link to an image of the recruiter.

You could adapt your importXML formula to grab these instead – or add a new formula in D2 to add this extra information alongside the others (watch out for mismatches where one div may be missing for some reason).

Finding and cleaning links

You’ll notice that the formula above grabs text, but not the links within each job ad. To do that we need to adapt the formula as follows. Try typing this in cell D2:

=ImportXML(“http://www.gorkanajobs.co.uk/jobs/journalist/”, “//div[starts-with(@class, ‘jobWrap’)]//@href”)

This is identical to the previous formula, with one addition at the end:

//@href

What this does is grab the value of any link in the HTML. In other words, the bit after a href=”

You’ll notice that the results are partial URLs, such as /job/3807/senior-account-executive-account-manager/

These are known as relative URLs, because they are relative to the site they are on, but will not work when placed on another site.

This is easily cleaned up. In cell E2 type the following:

=CONCATENATE(“http://www.gorkanajobs.co.uk”,D2)

This creates a new URL beginning with http://www.gorkanajobs.co.uk and ending with the contents of cell D2 – the relative URL. Copy the formula down the column so it works for all cells in column D.

Not always so tidy

Not all websites will be so structured. The more structured the webpage – or the data within it – the better. But you may have to dig into the HTML and/or tweak your formula to find that structure. Or you may have to settle for some rough and ready data that you clean later.

The key advantage of =importXML, however, is how it is able to pull information from HTML into a table that you can then interrogate, with different columns for different parts of that data.

For more help with these processes you can find explanations of how to write expressions in XPath here but be prepared to use trial and error to get the right expression for the question you’re asking. The Vancouver Data Blog offers some specific examples that can be easily adapted.

Getting updates from your spreadsheet

Finally, this can be useful because Google Docs allows you to receive notifications whenever any changes are made, and to publish your spreadsheet as an RSS feed. This is explained in this blog post, which is also the source of the movie above.

And if you want to see all of this in action I’ve published an example spreadsheet demonstrating all the above techniques here.