For the last few years I’ve been teaching my journalism students a dedicated class on cognitive bias — common ways of thinking that lead journalists (and audiences and sources) to make avoidable mistakes.

Journalism is particularly vulnerable to cognitive bias: we regularly make decisions at speed; we have to deal with too much information — or extract meaning where there isn’t enough of it. Each of those situations makes us vulnerable to poor decision-making — and many of the rules that we adhere to as journalists are designed to address that.

Some cognitive biases, such as groupthink, prejudice, and confirmation bias (covered in a second post here), are well known, but many others are not (there are over 180 of them). Those include the bias blind spot: the tendency to see how biases affect other people, but not yourself.

So if you were thinking “this doesn’t apply to me”, read on for a guide to some of the cognitive biases likely to affect journalists — from being manipulated by sources to being bad editors of our own copy — and what to do to tackle them.

Why we don’t edit our own copy well: the IKEA effect and the sunk cost fallacy

The IKEA effect is the tendency to place more value on things that you have made yourself. It’s one reason why most people are worse at editing their own work than they are at editing others’.

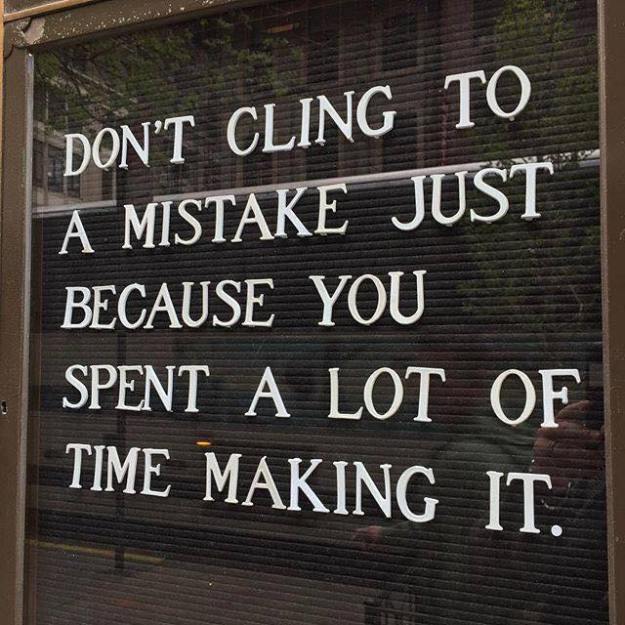

A second reason is the sunk cost fallacy. This is the tendency to keep pursuing a project regardless of its quality, purely because we have already invested so much effort in it.

If you’ve ever insisted on staying in the cinema even when you were not enjoying a film, or finishing a meal that you didn’t like, then you’ve already experienced this.

In journalistic terms, the sunk cost fallacy shows itself not only in a failure to abandon flawed stories (see also: plan continuation bias) but, perhaps more often, in a reluctance to remove material that we have worked hard to obtain.

“That interview took me weeks to organise — I’m not taking that out.”

“That visualisation was really tricky to pull off, we have to use it.”

Traditionally, newsrooms address this by having stories edited by someone other than the person who wrote them. But with an increasing amount of journalism being self-edited (especially on social media, where we publish directly to an audience without editors), it’s important to recognise how these cognitive biases can make for poorer reporting.

As well as recognising those biases, we can actively encourage the opposite behaviour.

For example, I encourage my students to take pride in removing large chunks from a piece of work. It’s slightly masochistic, but there is a certain pleasure in chopping whole paragraphs out of a piece you sweated blood over.

Why no one is actually stealing your ideas — and why your ideas aren’t as original as you think

Another cognitive bias to beware of when it comes to journalistic projects is uniqueness bias. This is the tendency to see our projects (and ourselves) as more unique than they actually are.

We can guard against this in two ways. Firstly, recognise that the feeling that someone has “stolen” your idea is often an illusion: it’s quite common for several people to arrive at the same story idea independently.

Secondly, it’s important to spend extra time refining a story idea so that it genuinely does represent a “fresh angle” on an issue. The more specific the better: think about the particular interviews, locations, data and documents you will use.

Remember, however, that no idea is entirely original — an interviewee we haven’t heard from before, a new timescale (bringing an old idea up to date, or taking a historical angle), or a different location (looking at the same issue in a new place) are three simple ways to freshen up a story pitch.

“If it bleeds it leads”: why the news is always negative

It’s a common complaint aimed at journalists that we “only report the bad stuff”. And a common response is that good news isn’t generally surprising (i.e. newsworthy), and that when we report bad news, “you read it” (conversely, when we report good news, people don’t).

The cognitive bias here is negativity bias — we pay more attention to negative things, and remember them better.

https://twitter.com/AndyBatuchi/status/1240471771482112002

Is that a problem? Well, sometimes yes — it can lead journalists to believe false claims without any supporting evidence.

It can distort public perceptions of dangers (such as crime) so that they do not reflect reality.

And it can mean that potential solutions to those problems go underreported.

A post by the BBC’s Emily Kasriel describes exactly this situation neatly:

“Many years ago, when covering elections in Nigeria, I remember stumbling upon a peaceful voting booth. Deciding that there was nothing worth reporting there, I left in search of a ‘real’ story. Human beings have evolved through many years in risky environments to become vigilant, alert to negative information to help us avoid poisonous berries or marauding lions. We seem programmed to be more likely to spot and remember negative stories.”

The rise of solutions journalism, which Kasriel is responsible for at the BBC, has been a notable attempt to address this cognitive bias, embedded as it is in the very fabric of journalism. It is supported by a growing evidence base suggesting that people spend longer reading stories that take this approach, and even that it is better for their mental health.

This isn’t about ‘reporting good news’ (which audiences don’t read) or human interest stories but, when done properly, about reporting problems and solutions together. Here’s how to pitch such a story.

It is also about being more creative and critical in how we frame our reporting. Rather than contribute to panic buying by focusing on a purely negative angle, for example, The Texas Tribune reported on it with the headline “Stop buying all the toilet paper. There’s no shortage if everyone takes only what they need”.

Framing: when interviewees spin bad news

While negativity bias leads us to focus too narrowly on the bad things in our stories, the framing effect might lead us to understate them.

The framing effect is the tendency to respond differently to the same information depending on whether it is presented in terms of gain or loss, something sources can exploit to encourage us to choose (or reject) an option.

A politician, for example, might hail the “70% success rate” of a new policy, when the same rate could equally be described as failing 30% of the time. Police might claim to charge suspects in the majority of cases instead of admitting that one in three crimes are closed without a suspect being identified.

Failing to identify that framing can mean we miss the real story. Being aware of it prepares us to ask better questions and be more sceptical: is that failure rate acceptable? Is that source trying to manipulate me?

Another cognitive bias — the anchoring effect — may be used to make us think less negatively about an action or policy by initially suggesting or enacting more extreme measures.

David Ly highlights Donald Trump’s use of this approach in, for example, announcing “that he will be leaving intact Obama’s order protecting LGBTQ workers rights” or announcing an “extreme immigration ban, followed by a relaxing ‘correction’”, leading to more positive coverage than would otherwise have been the case.

Understanding this should make us less willing to fall for such tactics, and more ready to report on the negative impacts that remain regardless of the anchoring.

Finally, fading affect bias means that the emotions attached to negative memories fade faster than those attached to positive ones. In practice, it may be more important than you realise to include background information about negative events.

Using the wrong picture (and other too-common mistakes): out-group homogeneity bias

It’s become an all-too-regular event: a news organisation apologises after using the wrong clip or captioning an image with the wrong name.

Those wrongly identified are too often members of an ethnic minority, who argue that there is a “tendency of journalists to treat Black, Asian and minority ethnic (BAME) [figures] as part of a homogenous group” because there are too few journalists from those ethnicities in newsrooms.

This tendency has a name: out-group homogeneity bias, the tendency to see groups that we are not a part of (ethnicities, classes, gender, sexuality, disability) as being similar to each other, while being able to see diversity within our own groups.

https://twitter.com/jimwaterson/status/1224657174720282625

Everyone is susceptible to this bias — whatever your race, gender, sexuality, age or other qualities. If you are young, for example, it’s more likely that you will struggle to see how older people differ from each other; if you are female you will see more diversity among women than among men; and so on.

How do we tackle it? An awareness of this tendency should at least make us more likely to check that images and video footage really do show the person we think they show, especially when that person is from an ‘out-group’.

But we should also be careful about how this tendency affects our perception of audiences and sources.

One comment piece in Guardian Australia, for example, outlines the problem with seeking a response from the “Muslim community” on stories:

“There are implications that one single person can actually represent the entire Muslim community. Very often, comment will be sought from people like the Grand Mufti or the Head of the Australian National Imam’s Council (ANIC), as though representation mimics a hierarchy.

“There is no hierarchy in the Muslim community, and there is no peak body or individual who can adequately speak on behalf of the entire community. Our community is made up of a complex web of ethnicities, backgrounds, sects, cultures, generations and political groups, with constant cross-pollination. There are no clear divides between these lines, and no one uniting factor.”

We can address it institutionally through more socially diverse hiring practices, but also individually by making a conscious effort to broaden the range of sources in out-groups that we interact with, and the ways in which we draw on those sources (reactions, leads, expertise), too.

We can also look at examples where mistakes have been made and identify what steps could have prevented them.

This Dispatches investigation into “Traveller crime” (or the related Daily Mail story), for example, can be used to think through how out-group homogeneity bias might have been identified and addressed to avoid some of the weaknesses in the reporting.

Notice, for example, how a wide range of crime categories (youth vandalism, extortion, theft, hare coursing, assault) are treated as homogenous rather than being looked at individually. We can also identify how, when analysing reports of crime near Traveller sites, the reporters make the mistake of assuming that the crimes were perpetrated by Travellers, rather than the possibility that Travellers might be the victims in those reports (or both).

Identifying these generalisations can lead to alternative, more concrete story ideas: an investigation into a specific crime, for example, or answering the question of who the victims of crime near Traveller sites are. Unpicking a single social issue and how it affects different parts of the Traveller community would also bring the depth and nuance that a narrative of homogeneity misses.

The free ebook Everybody In: A Journalist’s Guide To Inclusive Reporting For Journalism Students has some excellent advice along these lines, with a series of chapters by different journalists about their experiences in different newsrooms.

If you have techniques for dealing with any of these cognitive biases, please let me know. You can read a second post on confirmation bias here.

UPDATE (April 23 2020): I didn’t tackle hindsight bias in this post, but this is also well worth exploring. This BBC piece not only provides a good explanation but also hints at some techniques for spotting it and anticipating it: background research into past statements by interviewees will help you challenge hindsight bias in sources, too.

I began working as a broadcast news reporter/anchor in the 90s. I learned early on to keep myself out of the story until it was time to fact-check. I started interviews with basic knowledge, and then built on the newsmaker’s response.

To that end, one way to combat cognitive biases is to allow the story to unfold. Approach it as if it’s your first encounter with the topic and let the “characters” play their roles.

Also, I was the only black news reporter at my television station and, as you mentioned, although I didn’t speak for all black people, I was a resource for my co-workers. I helped them understand what could be considered bigoted, sexist, or racially offensive in a news report.

Love these messages…. wondering if the content in the graphic is available in any different (desktop friendly) format?
