I’m speaking at the Broadcast Journalism Teaching Council’s summer conference this week about artificial intelligence, specifically generative AI. It’s a deceptively huge area that presents journalism educators with a lot to adapt to in their teaching, so I decided to put the things we need to teach in order of priority.

Each of these priorities could form the basis for part of a class, or a whole module – and you may have a different ranking. But at least you know which one to do first…

Priority 1: Understand how generative AI works

The first challenge in teaching about generative AI is that most people misunderstand what it actually is — so the first priority is to tackle those misunderstandings.

One common misunderstanding is that generative AI tools can be used like search engines: type in a question; get an answer.

But tools like ChatGPT are better seen as storytellers — specifically, as unreliable narrators, with their priority being plausible, rather than true, stories.

This doesn’t mean that the output of generative AI tools should be disregarded, in the way that some people tell journalists not to use Wikipedia. It just means that the output is an opportunity to introduce general journalistic practice in verifying and following up information provided by a source.

A useful analogy here is the film “based on a true story”. You’d want to know which elements of the film were true, and which were added for dramatic effect, before believing it.

Second, generative AI tools are trained. This shapes the stories that they tell — and represents a bias that journalists should be looking for when using any source.

At the most basic level, for example, most algorithms will have been trained on more English-language texts than texts in other languages, and on more Western images than images from elsewhere, so they know more about information in English than in other languages.

Why? Because there is more material available in English than in other languages (90% English in the case of GPT-3).

Once you establish this point you can see similar potential problems: there’s more material available by large corporations than by small independent publishers; more by men than by women; more by white people than by other ethnic groups; and so on. In short, any group which is a minority will be underrepresented in the training data.

So ask it to tell you about Churchill and it will tell you the story most often told, when as a journalist you want a range of perspectives, including critical ones.

Another point to highlight about training data is timeliness: ChatGPT’s training data only goes up until 2021 at the time of writing.

And finally, token limits: there’s a limit to the amount of text (measured in tokens, which are words or pieces of words) that you can input as part of a query on tools like ChatGPT. This means there are limits to what you can ask, ways of working around that, and alternatives with larger limits.
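
One common workaround for input limits is to split a long document into smaller pieces and submit them one at a time. The sketch below is only a rough illustration: it counts words rather than tokens (real tools count tokens, so a word count is an approximation), and the function name `chunk_words` and the limit of 3 are made up for the example.

```python
def chunk_words(text, max_words):
    """Split text into pieces of at most max_words words each.

    Word count is only a rough stand-in for the token count
    that tools like ChatGPT actually enforce.
    """
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


# Example: a 7-word sentence split into chunks of up to 3 words
chunks = chunk_words("one two three four five six seven", 3)
print(chunks)  # ['one two three', 'four five six', 'seven']
```

In practice you would pick a limit well below the tool's stated maximum, because the prompt instructions and the response also count towards it.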

Priority 2: Know how to attribute the use of ChatGPT

Plagiarism is one of the biggest concerns about ChatGPT and similar tools: its ability to write plausible (if not entirely factual) material that isn’t word-for-word taken from an existing source makes it a tempting option for the potential cheat.

But asking journalism students not to use ChatGPT is like asking students not to have sex: many will have already done it, and they’re going to be much more interested in explanations on how to do it well than in admonitions not to do it at all.

Getting students (or guest speakers) to have a discussion about how they have used generative AI is a useful first step; then we can explain how they can get credit for using ChatGPT.

That means, firstly, building confidence in attributing. Many universities, from Exeter and Newcastle in the UK to NYU and Monash, have already begun publishing guides on their websites on how to attribute the use of tools like ChatGPT.

Secondly, it means encouraging students to critically reflect on their use of generative AI with reference to good practice, and engage with current debates such as bias and diversity (more resources on Journalist’s Toolbox).

(There is a separate discussion to be had about ensuring that assessment design does not rely on merely regurgitating information).

Priority 3: Using ChatGPT for editorial feedback

The biggest application I see for generative AI in education is subbing feedback. With a well-written prompt, tools like ChatGPT can help students to see technical and stylistic mistakes in their work and understand more professional ways to phrase and structure it (Journalist’s Toolbox already has a section dedicated to AI writing and editing tools).

The prompt here is crucial: asking ChatGPT to correct your writing is one thing; asking it to “tell me any mistakes I need to correct in this paragraph, explaining what you’ve changed and why” is where AI comes into its own.

We all know as journalists how much difference it makes when your work is well edited: you learn how to write better. And if you have an editor willing to explain the changes they’re making, you are especially lucky.

ChatGPT is that editor.

It can also edit for different purposes (e.g. optimised for search or social), formats (e.g. news or features) and audiences, helping students to understand the subtleties and considerations involved.
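
To make the point about prompts concrete, here is a minimal sketch of a reusable feedback prompt that varies by purpose, format and audience. Everything in it is an assumption for illustration: the function name `build_feedback_prompt`, its parameters and the exact wording are not taken from any tool, and the resulting text would simply be pasted into (or sent to) whichever AI tool the student is using.

```python
def build_feedback_prompt(draft, purpose="search",
                          story_format="news",
                          audience="a general audience"):
    """Assemble an editing prompt that asks for explanations, not just corrections."""
    return (
        f"Act as a sub-editor. The copy below is a {story_format} piece "
        f"for {audience}, and should be optimised for {purpose}. "
        "Tell me any mistakes I need to correct in this copy, "
        "explaining what you've changed and why.\n\n"
        f"Copy:\n{draft}"
    )


prompt = build_feedback_prompt(
    "The council have approved it's budget.",
    purpose="social",
    story_format="news",
    audience="local readers",
)
print(prompt)
```

Keeping the purpose, format and audience as separate parameters lets students run the same draft through several versions of the prompt and compare the feedback.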

What it does, and how well it does this, will vary — and that’s where critical reflection is crucial. Encouraging students to include evidence of how they have incorporated AI-generated material into their workflow can be a good way of getting them to look at their own work more critically.

One fascinating example of this is ChatGPT being asked to highlight potential bias in the reporter’s own story. Trusting News’s Lynn Walsh tried this and reflected:

“As journalists covering topics like abortion and climate change, we should be aware of how people with differing views on the topics feel about them. I agree. We should be aware and really need to be aware of people’s differing views if we want to build trust.

“How often though, are journalists thinking about those differing views and how people with each of those views would perceive a story they are producing? Unfortunately, I don’t think this happens as often as it needs to.”

What is equally interesting, however, is to debate how ChatGPT has ‘learned’ to spot bias. The results of Trusting News’s experiment suggest a US-centric model of impartiality based on training material that hasn’t perhaps engaged fully with the challenges of ‘He said/she said’ reporting or the concept of “due” impartiality. All of which is useful material to discuss.

Priority 4: Know how to write effective and ethical prompts

As AI becomes a part of the industry, journalists will be expected to have the skills to work with AI tools — and prompt-writing is a skill that has to be learned and practised.

Francesco Marconi’s tips on AI prompt writing for journalists provide a useful starting point here.

What’s useful about these prompts is that they also prompt the student journalist to think about things like audience, style, format, and length: a useful habit for any journalistic task, AI-augmented or not.

It’s important to note on the last prompt listed (“request sources”) that asking ChatGPT to provide sources does not guarantee that those sources exist, as it does invent sources. As a result each source must be checked.

Another useful source for prompt inspiration is FlowGPT, a database of prompts that can be searched and used.

Teaching prompt-writing also helps introduce the ethical issues in prompting: the third principle of the Generative AI Diversity Guidelines is to “Build diversity into your prompts”:

“Ask for diverse experts and perspectives. Journalists should explicitly seek, through their prompts, for Generative AI to draw on source material written and/or owned by different demographics. Where this is not possible journalists should use prompts to obtain lists of experts and recognised commentators on specific issues from different backgrounds. Going to the original work of these experts and commentators directly can complement any material created by Generative AI and address possible biases.”

We should also always prompt the tool to list its sources, and to explain what it has done and why.

Priority 5: Idea and source generation

Just as ChatGPT can provide a fresh perspective on our writing, it can also provide different ideas on story angles and sources.

A common mistake made by student journalists, for example, is to come up with ideas to cover an issue, rather than a story. “I’m going to write about homelessness”, for example, is not a good story pitch.

ChatGPT, however, understands that feature stories must have a clear angle, and news articles must have something new happening. So asking it to generate ideas about an issue can help the student journalist to understand what a ‘feature idea’ actually is.

Another useful prompt is to ask what parts of a particular system might be suitable for a journalistic investigation, or what aspects of an issue might be suitable for a news story.

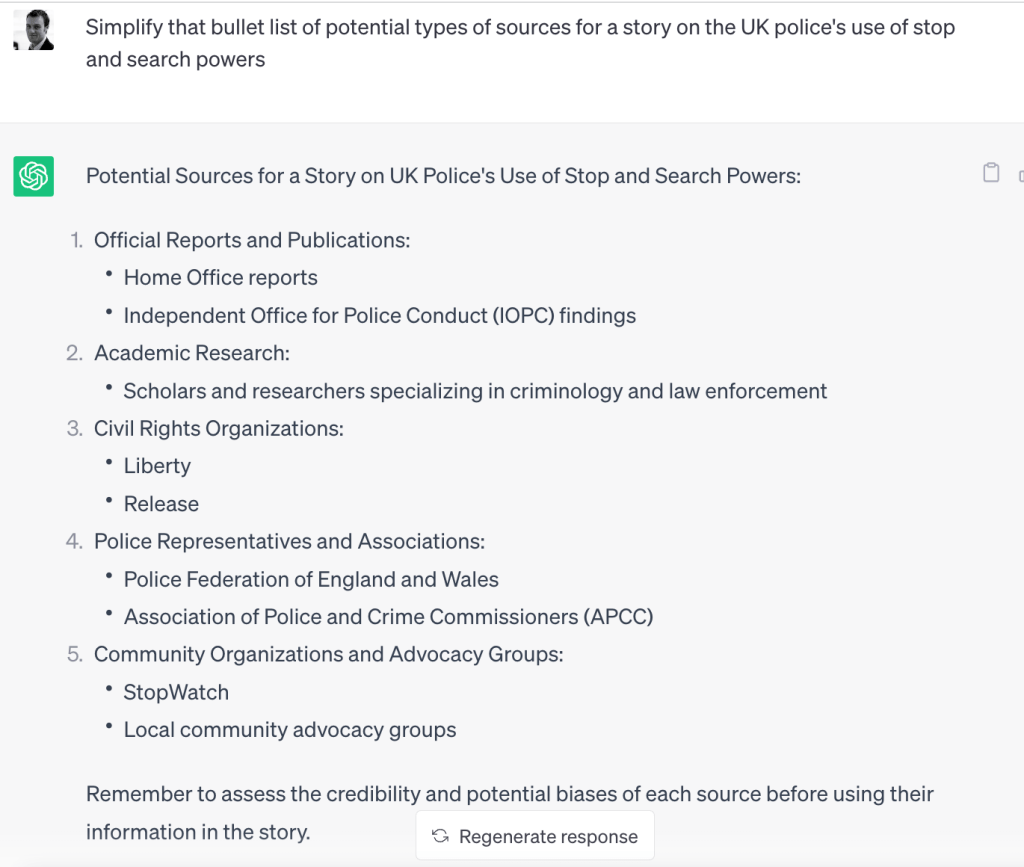

When asked for sources it can suggest types of sources that the student journalist may not have considered, including specific groups.

Prompts should ask for diverse sources that represent a wide range of perspectives on the issue — and the student should be aware that more recent developments are unlikely to be factored into the training data.

Priority 6: Technical support

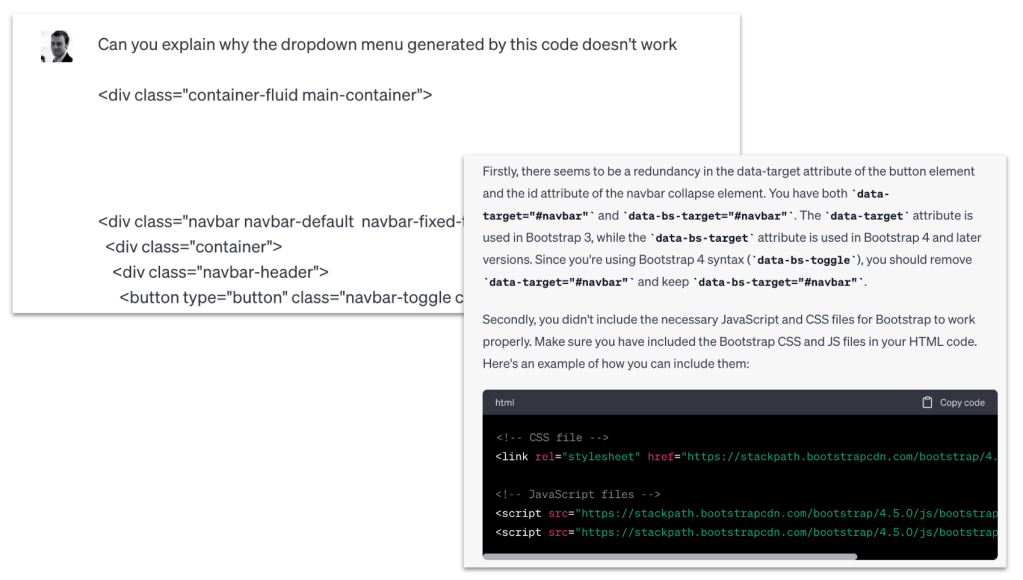

One of the biggest impacts I’ve seen of ChatGPT has been on my students’ coding. ChatGPT is particularly good at generating code, translating code (from one language to another) and even troubleshooting code. It can also help to generate spreadsheet formulae.

It is relatively easy to get ChatGPT to write the initial code for a scraper, which can then be built on, providing more motivation for learning code. It is also possible to ask ChatGPT for help with error codes and other problems.
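
As an illustration of the kind of starting point a student might get and then build on, here is a minimal scraper sketch using only Python’s standard library. It is not output from ChatGPT: the sample HTML, the choice of `<h2>` tags and the names `HeadlineParser` and `extract_headlines` are all invented for the example, and a real scraper would fetch a live page and need adapting to that page’s structure.

```python
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collect the text inside every <h2> tag."""

    def __init__(self):
        super().__init__()
        self.in_h2 = False
        self.headlines = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self.in_h2 = True
            self.headlines.append("")  # start a new headline

    def handle_endtag(self, tag):
        if tag == "h2":
            self.in_h2 = False

    def handle_data(self, data):
        # Text inside nested tags (e.g. <em>) is still added to the headline
        if self.in_h2:
            self.headlines[-1] += data


def extract_headlines(html):
    """Return a list of cleaned-up <h2> headlines from an HTML string."""
    parser = HeadlineParser()
    parser.feed(html)
    parser.close()
    return [h.strip() for h in parser.headlines]


# Made-up sample page, standing in for HTML fetched from a website
SAMPLE_HTML = """
<html><body>
<h2>Council approves budget</h2>
<p>Details here.</p>
<h2>School <em>reopens</em> after flood</h2>
</body></html>
"""

print(extract_headlines(SAMPLE_HTML))
# ['Council approves budget', 'School reopens after flood']
```

Asking students to change which tag is collected, or to add the link for each headline, is one way to turn generated code into a learning exercise.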

There is an increasing range of dedicated AI tools, too, from AutoRegex for writing regular expressions and AI2sql for SQL queries, to Sheeter for spreadsheet formulae. And Journalist’s Toolbox has a section dedicated to other AI-driven coding tools.

Priority 7: Background briefings

One of the most common ways that people use generative AI is as a form of search engine, providing background information on a topic they need to get to grips with. This is problematic for reasons already covered above — but can still be useful if the prompts are well written.

For example, ChatGPT can be used to explain different parts of a complex system in order to get a quick overview of it that you can then dig into further.

Generative AI tools can also be used to summarise lengthy documents and reports. Again, this should be a starting point that can be used to identify particular sections or concepts to focus on, rather than treated as a 100% accurate representation.

Priority 8: Filtering and prioritising

AI’s ability to filter and prioritise information has obvious potential for journalists. The Generative AI for News Media Colab notebook has, among other things, code that allows you to extract newsworthy information from press releases or scientific papers.

You can also input the abstracts of scientific papers or datasets and get it to rank them based on a particular audience and criteria (e.g. newsworthiness). The results are up for discussion.

Priority 9: Automation of writing

Finally, at the bottom of my priorities, is getting AI tools to automate the writing process. Some obvious applications include inputting a dataset and asking it to generate a story for each row, or inputting a transcript and asking it to write it up as an interview feature, or simply asking it to write an explainer based on public information.

In all these cases, again, it’s important to remember the potential for AI to hallucinate and make things up that aren’t in your data or transcript. You will have to identify every fact and check it.
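
One small part of that checking can be partly automated. The sketch below flags any number in a generated draft that does not appear in the row of data it was supposedly based on. It is an illustration under loud assumptions: the data row and draft are made up, the function name `unsupported_numbers` is hypothetical, and it only matches plain digits (a figure written as “1,400” or “twelve” would be missed). It narrows down what to look at first and does not replace checking every fact.

```python
import re

# Matches whole numbers and simple decimals such as 140 or 3.5
NUMBER_PATTERN = re.compile(r"\d+(?:\.\d+)?")


def unsupported_numbers(generated_text, source_row):
    """Return numbers in the generated text that are absent from the source row."""
    source_numbers = set()
    for value in source_row.values():
        source_numbers.update(NUMBER_PATTERN.findall(str(value)))
    found = NUMBER_PATTERN.findall(generated_text)
    return [n for n in found if n not in source_numbers]


# Made-up example data and draft
row = {"area": "Northtown", "year": 2022, "burglaries": 140}
draft = "Northtown recorded 140 burglaries in 2022, up 12% on the previous year."

print(unsupported_numbers(draft, row))  # ['12']
```

Here the “12%” rise is flagged because nothing in the row supports it, which is exactly the kind of plausible invention to watch for.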

You’ll probably — if my experiments are anything to go by — also have to edit out some horrible overwriting and overly gushing passages that sound more like marketing than journalism (again, probably a sign of the blurred boundary between the two in the training materials).

The process may be best, then, for giving you ideas for approaches you might not have considered, rather than generating anything usable. But again, it’s vital that students document the process and are transparent about it.

Other uses of generative AI

This list doesn’t even touch on many other uses of generative AI, including story illustration (some ethical issues to discuss here) and video editing. I’d welcome any ideas or examples on introducing these and other aspects to students.

Very interesting

Hi Paul,

Great post. Really helpful to see how you are tackling it to be able to compare and contrast with what we’re doing at Cardiff.

Thanks,

Gavin