Image by Lauren York

Data is so central to the decision-making that shapes our countries, jobs and even personal lives that an increasing amount of data journalism involves scrutinising the problems with the data itself. Here’s an illustrative list of cases where bad data became the story – and the lessons those cases can teach data journalists:

Deaths in police custody unrecorded

This investigation by the Bureau of Investigative Journalism demonstrates an important question to ask about data: who decides what gets recorded?

In this case, the BIJ identified “a number of cases not included in the official tally of 16 ‘restraint-related’ deaths in the decade to 2009 … Some cases were not included because the person has not been officially arrested or detained.”

As they explain:

“It turns out the IPCC has a very tight definition of ‘in custody’ – defined only as when someone has been formally arrested or detained under the mental health act. This does not include people who have died after being in contact with the police.

“There are in fact two lists. The one which includes the widely quoted list of sixteen deaths in custody only records the cases where the person has been arrested or detained under the mental health act. So, an individual who comes into contact with the police – is never arrested or detained – but nonetheless dies after being restrained, is not included in the figures.

“… But even using the IPCC’s tightly drawn definition, the Bureau has identified cases that are still missing.”

Cross-checking the official statistics against wider reports was a key technique. So was using the Freedom of Information Act to request the details behind them and the details of those “who died in circumstances where restraint was used but was not necessarily a direct cause of death”.

Cooking the books on drug-related murders

Drug related murders in Mexico

Cross-checking statistics against reports was also used in this investigation by Diego Valle-Jones into Mexican drug deaths:

“The Acteal massacre committed by paramilitary units with government backing against 45 Tzotzil Indians is missing from the vital statistics database. According to the INEGI there were only 2 deaths during December 1997 in the municipality of Chenalho, where the massacre occurred. What a silly way to avoid recording homicides! Now it is just a question of which data is less corrupt.”

Diego also used Benford’s Law to identify potentially fraudulent data, a technique that has also been used to highlight relationships between dodgy company data and real-world events such as the dotcom bubble and deregulation.
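For readers who haven’t met Benford’s Law: in many naturally occurring sets of numbers, the leading digit d turns up with a frequency of roughly log10(1 + 1/d), so about 30% of values start with a 1. The sketch below is not Diego’s code, just a minimal illustration in Python that assumes you have a list of positive whole-number counts. The function names are hypothetical, and a large deviation is only a prompt to ask questions, not proof of fraud.

```python
import math
from collections import Counter


def leading_digit(count):
    """Return the first digit of a whole-number count, or None for zero."""
    count = abs(int(count))
    if count == 0:
        return None  # zero has no leading digit, so it is skipped
    return int(str(count)[0])


def benford_expected(digit):
    """Share of values Benford's Law predicts will start with this digit."""
    return math.log10(1 + 1 / digit)


def benford_table(counts):
    """Map each digit 1-9 to a pair: (observed share, expected share)."""
    digits = [d for d in map(leading_digit, counts) if d is not None]
    tally = Counter(digits)
    total = len(digits)
    return {
        d: ((tally[d] / total) if total else 0.0, benford_expected(d))
        for d in range(1, 10)
    }


def benford_chi_squared(counts):
    """Chi-squared statistic: larger means further from Benford's Law."""
    digits = [d for d in map(leading_digit, counts) if d is not None]
    tally = Counter(digits)
    total = len(digits)
    statistic = 0.0
    for d in range(1, 10):
        expected = benford_expected(d) * total
        statistic += (tally[d] - expected) ** 2 / expected
    return statistic


if __name__ == "__main__":
    # Powers of two are a textbook series that follows Benford's Law closely.
    sample = [2 ** n for n in range(100)]
    for digit, (observed, expected) in benford_table(sample).items():
        print(f"{digit}: observed {observed:.3f}, expected {expected:.3f}")
```

The test works best on figures that span several orders of magnitude; small or tightly bounded numbers can fail it for entirely innocent reasons.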

Poor records mean no checks

Detective Inspector Philip Shakesheff exposed a “gap between [local authority] records and police data”, reported The Sunday Times in a story headlined ‘Care home loses child 130 times’:

“The true scale of the problem was revealed after a check of records on police computers. For every child officially recorded by local authorities as missing in 2010, another seven were unaccounted for without their absence being noted.”

Why is it important?

“The number who go missing is one of the indicators on which Ofsted judges how well children’s homes are performing and the homes have a legal duty to keep accurate records.

“However, there is evidence some homes are failing to do so. In one case, Ofsted gave a good report to a private children’s home in Worcestershire when police records showed 1,630 missing person reports in five years. Police stationed an officer at the home and pressed Ofsted to look closer. The home was downgraded to inadequate and it later closed.

“The risks of being missing from care are demonstrated by Zoe Thomsett, 17, who was Westminster council’s responsibility. It sent her to a care home in Herefordshire, where she went missing several times, the final time for three days. She had earlier been found at an address in Hereford, but because no record was kept, nobody checked the address. She died there of a drugs overdose.

“The troubled life of Dane Edgar, 14, ended with a drugs overdose at a friend’s house after he repeatedly went missing from a children’s home in Northumberland. Another 14-year-old, James Jordan, was killed when he absconded from care and was the passenger in a stolen car.”

Interests not registered

When there are no formal checks on declarations of interest, how can we rely on them? In Chile, the Ciudadano Inteligente Fundacion decided to check the Chilean MPs’ register of assets and interests by building a database:

“No-one was analysing this data, so it was incomplete,” explained Felipe Heusser, executive president of the Fundacion. “We used technology to build a database, using a wide range of open data and mapped all the MPs’ interests. From that, we found that nearly 40% of MPs were not disclosing their assets fully.”

The organisation has now launched a database that “enables members of the public to find potential conflicts of interest by analysing the data disclosed through the members’ register of assets.”

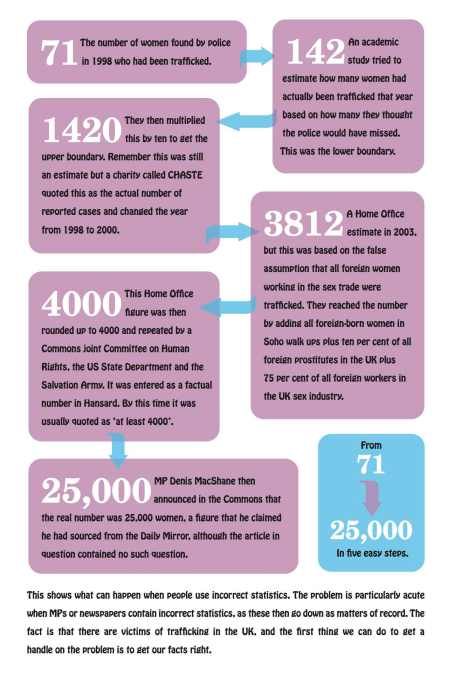

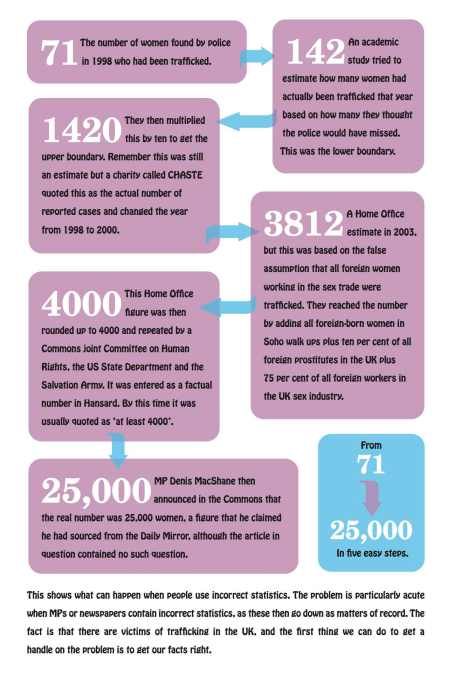

Data laundering

Tony Hirst’s post about how dodgy data was “laundered” by Facebook in a consultant’s report is a good illustration of the need to ‘follow the data’.

“We have some dodgy evidence, about which we’re biased, so we give it to an “independent” consultant who re-reports it, albeit with caveats, that we can then report, minus the caveats. Lovely, clean evidence. Our lobbyists can then go to a lazy policy researcher and take this scrubbed evidence, referencing it as finding in the Deloitte report, so that it can make its way into a policy briefing.”

“Things just don’t add up”

In a video, Ellen Miller of the Sunlight Foundation takes the US government to task over the inconsistencies in its transparency agenda, and the flawed data published on its USAspending.gov site. The data was so flawed that the foundation launched the Clearspending website to automate the detection of discrepancies between two sources of the same data, and to highlight them.
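The underlying idea of comparing two sources that ought to agree is easy to try yourself. The following is a rough sketch in Python and is not how Clearspending itself works: the function name, the record IDs and the amounts are all invented for illustration, and it assumes each source has already been reduced to a mapping of record ID to amount.

```python
def compare_sources(source_a, source_b, tolerance=0.01):
    """Compare two {record_id: amount} mappings that should agree.

    Returns the IDs found in only one source, plus the IDs whose amounts
    differ by more than `tolerance` (a fraction of the larger amount).
    """
    ids_a, ids_b = set(source_a), set(source_b)
    mismatched = {}
    for record_id in sorted(ids_a & ids_b):
        amount_a, amount_b = source_a[record_id], source_b[record_id]
        baseline = max(abs(amount_a), abs(amount_b))
        # Skip pairs where both amounts are zero: they trivially agree.
        if baseline and abs(amount_a - amount_b) / baseline > tolerance:
            mismatched[record_id] = (amount_a, amount_b)
    return {
        "only_in_a": sorted(ids_a - ids_b),
        "only_in_b": sorted(ids_b - ids_a),
        "mismatched": mismatched,
    }


if __name__ == "__main__":
    # Made-up figures, purely to show the shape of the output.
    published = {"P-001": 1_000_000, "P-002": 250_000, "P-003": 40_000}
    reported = {"P-001": 1_000_000, "P-002": 900_000, "P-004": 75_000}
    print(compare_sources(published, reported))
```

Records that appear in only one source are often as interesting as the ones whose numbers disagree, which is the same lesson as the deaths in custody and Acteal examples above.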

Key budget decisions made on useless data

Sometimes data might appear to tell an astonishing story, but this turns out to be a mistake – and that mistake itself leads you to something much more newsworthy, as Channel 4’s FactCheck found when it started trying to find out if councils had been cutting spending on Sure Start children’s centres:

“That ought to be fairly straightforward, as all councils by law have to fill in something called a Section 251 workbook detailing how much they are spending on various services for young people.

“… Brent Council in north London appeared to have slashed its funding by nearly 90 per cent, something that seemed strange, as we hadn’t heard an outcry from local parents.

“The council swiftly admitted making an accounting error – to the tune of a staggering £6m.”

And they weren’t the only ones. In fact, the Department for Education admitted the numbers were “not very accurate”:

“So to recap, these spending figures don’t actually reflect the real amount of money spent; figures from different councils are not comparable with each other; spending in one year can’t be compared usefully with other years; and the government doesn’t propose to audit the figures or correct them when they’re wrong.”

This was particularly important because the S251 form “is the document the government uses to reallocate funding from council-run schools to its flagship academies”:

“The Local Government Association (LGA) says less than £250m should be swiped from council budgets and given to academies, while the government wants to cut more than £1bn, prompting accusations that it is overfunding its favoured schools to the detriment of thousands of other children.

“Many councils’ complaints, made plain in responses to an ongoing government consultation, hinge on DfE’s use of S251, a document it has variously described as “unaudited”, “flawed” and “not fit for purpose”.”
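The Brent figure stood out because a year-on-year change of nearly 90 per cent looked implausible. If you are working through returns from hundreds of councils, a quick script can surface candidates like that for a phone call. This is only a sketch in Python with hypothetical names and an arbitrary threshold, assuming you have two mappings of council name to spending for consecutive years; it says nothing about why a figure changed.

```python
def flag_large_changes(previous, current, threshold=0.5):
    """Return {name: relative change} for big year-on-year swings.

    `previous` and `current` map a name (e.g. a council) to a figure.
    A change is flagged when it is at least `threshold` of the earlier
    figure, in either direction (0.5 means a 50 per cent rise or fall).
    """
    flagged = {}
    for name in sorted(set(previous) & set(current)):
        before, after = previous[name], current[name]
        if before == 0:
            continue  # relative change is undefined from a zero base
        change = (after - before) / before
        if abs(change) >= threshold:
            flagged[name] = change
    return flagged


if __name__ == "__main__":
    # Invented numbers: one authority appears to cut spending by 90 per cent.
    last_year = {"Council A": 100.0, "Council B": 100.0, "Council C": 80.0}
    this_year = {"Council A": 10.0, "Council B": 95.0, "Council C": 82.0}
    for name, change in flag_large_changes(last_year, this_year).items():
        print(f"{name}: {change:+.0%}")
```

A flag is a question to put to the press office, not a finding: in Brent’s case the answer turned out to be an accounting error rather than a cut.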

No data is still a story

Sticking with education, the TES reports on the outcome of an FOI request on the experience of Ofsted inspectors:

“[Stephen] Ball submitted a Freedom of Information request, asking how many HMIs had experience of being a secondary head, and how many of those had led an outstanding school. The answer? Ofsted “does not hold the details”.

““Secondary heads and academy principals need to be reassured that their work is judged by people who understand its complexity,” Mr Ball said. “Training as a good head of department or a primary school leader on the framework is no longer adequate. Secondary heads don’t fear judgement, but they expect to be judged by people who have experience as well as a theoretical training. After all, a working knowledge of the highway code doesn’t qualify you to become a driving examiner.”

“… Sir Michael Wilshaw, Ofsted’s new chief inspector, has already argued publicly that raw data are a key factor in assessing a school’s performance. By not providing the facts to back up its boasts about the expertise of its inspectors, many heads will remain sceptical of the watchdog’s claims.”

Men aren’t as tall as they say they are

To round off, here’s a quirky piece of data journalism by dating site OkCupid, which looked at the height of its members and found an interesting pattern:

Male height distribution on OkCupid

“The male heights on OkCupid very nearly follow the expected normal distribution—except the whole thing is shifted to the right of where it should be.

“Almost universally guys like to add a couple inches. You can also see a more subtle vanity at work: starting at roughly 5′ 8″, the top of the dotted curve tilts even further rightward. This means that guys as they get closer to six feet round up a bit more than usual, stretching for that coveted psychological benchmark.”

Do you know of any other examples of bad data forming the basis of a story? Please post a comment – I’m collecting examples.

UPDATE (April 20 2012): A useful addition from Simon Rogers: Named and shamed: the worst government annual reports explains why government department spending reports fail to support the Government’s claimed desire for an “army of armchair auditors”, with a list of the worst offenders at the end.
