It’s an often encountered situation, but one that can be a pain to address – merging data from two sources around a common column. Here’s a way of doing it in Google Refine…

Here are a couple of example datasets to import into separate Google Refine projects if you want to play along, both courtesy of the Guardian data blog (pulled through the Google Spreadsheets to Yahoo pipes proxy mentioned here):

– University fees data (CSV via pipes proxy)

– University HESA stats, 2010 (CSV via pipes proxy)

We can now merge data from the two projects by creating a new column in one project, using the values of an existing column to index into a similar column in the other project. Looking at the two datasets, both HESA Code and institution/University look like candidates for merging the data. Which should we go with? I’d go with the unique identifier (i.e. HESA code in this case) every time…

First, create a new column:

Now do the merge, using the cell.cross GREL (Google Refine Expression Language) command. Trivially, and pinching wholesale from the documentation example, we might use the following command to bring in Average Teaching Score data from the second project into the first:

cell.cross("Merge Test B", "HESA code").cells["Average Teaching Score"].value[0]

Note that there is a null entry and an error entry. It’s possible to add a bit of logic to tidy things up a little:

if (value!='null',cell.cross("Merge Test B", "HESA code").cells["Average Teaching Score"].value[0],'')

Here’s the result:
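
As an aside for readers who prefer to see the logic spelled out in code: the lookup-with-fallback behaviour can be sketched outside Refine in Python. This is only an illustration of the idea, not how Refine implements cell.cross. The rows, codes and scores are made up, and build_index and cross_first are hypothetical helper names.

```python
from collections import defaultdict

# Hypothetical stand-ins for the two projects: one dict per row, keyed by column name.
merge_test_a = [
    {"HESA code": "0001", "University": "Example University"},
    {"HESA code": "0002", "University": "Sample College"},
    {"HESA code": None, "University": "No Code Institute"},
]
merge_test_b = [
    {"HESA code": "0001", "Average Teaching Score": 71.5},
]

def build_index(rows, key_column):
    """Map each key value to the list of rows that carry it."""
    index = defaultdict(list)
    for row in rows:
        index[row[key_column]].append(row)
    return index

def cross_first(key, index, column, default=""):
    """Return `column` from the first matching row, or `default` if nothing usable matches."""
    if key is None or key not in index:
        return default
    return index[key][0][column]

index_b = build_index(merge_test_b, "HESA code")
scores = [
    cross_first(row["HESA code"], index_b, "Average Teaching Score")
    for row in merge_test_a
]
print(scores)  # [71.5, '', '']
```

The second row has a code with no partner in the other table and the third has no code at all; both fall back to an empty string rather than raising an error.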

Coping with not quite matching key columns

Another situation that often arises is that you have two columns that almost, but don’t quite, match. For example, this dataset has a different name representation than the above datasets (Merge Test C):

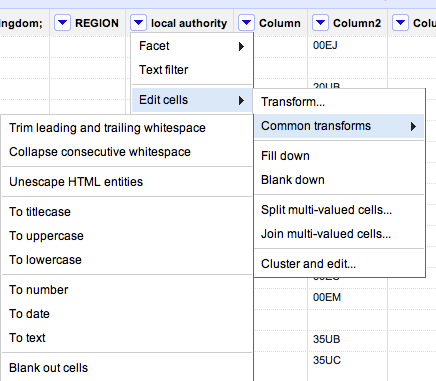

There are several text processing tools that we can use to try to help us match columns that differ in well-structured ways:

In the above case, where I am creating a new column based on the contents of the Institution column in Merge Test C, I’m using a couple of string processing tricks… The GREL expression may look complicated, but if you build it up in a stepwise fashion it makes more sense.

For example, the command replace(value,"this", "that") will replace occurrences of “this” in the string defined by value with “that”. If we replace “this” with an empty string ('' (two single quotes next to each other) or "" (two double quotes next to each other)), we delete it from value: replace(value,"this", "")

The result of this operation can be embedded in another replace statement: replace(replace(value,"this", "that"),"that","the other"). In this case, the first (inner) replace will replace occurrences of “this” with “that”; the result of this operation is passed to the second (outer) replace function, which replaces “that” with “the other”. Try building up the expression in real time, and see what happens. First use:

toLowercase(value)

(what happens?); then:

replace(toLowercase(value),'the','')

and then:

replace(replace(toLowercase(value),'the',''),'of','')

The fingerprint() function then separates out the individual words that are left, orders them, and returns the result (more detail). Can you see how this might be used to transform a column that originally contains “The University of Aberdeen” to “aberdeen university”, which might be a key in another project dataset?
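
To see the stepwise build-up end to end, here is a minimal Python sketch of the same chain. The simple_fingerprint function is a hypothetical, simplified approximation of fingerprint keying (lowercase, strip punctuation, split into words, de-duplicate, sort, rejoin); Refine’s own function may normalise more than this.

```python
import re

def simple_fingerprint(text):
    """Rough approximation of a fingerprint key (an assumption, not Refine's exact algorithm)."""
    cleaned = re.sub(r"[^\w\s]", "", text.strip().lower())
    # Split on whitespace, drop duplicate words, sort, and rejoin with single spaces.
    return " ".join(sorted(set(cleaned.split())))

name = "The University of Aberdeen"
step1 = name.lower()              # 'the university of aberdeen'
step2 = step1.replace("the", "")  # ' university of aberdeen'
step3 = step2.replace("of", "")   # ' university  aberdeen'
key = simple_fingerprint(step3)
print(key)  # aberdeen university

# Plain substring replacement also hits letters inside longer words.
print("northern".replace("the", ""))  # norrn
```

The last line is a reminder that replacing 'the' as a plain substring also removes those letters from the middle of longer words, which is one way a cleaned-up key can end up mangled.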

When trying to reconcile data across two different datasets, you may find you need to try to minimise the distance between almost common key columns by creating new columns in each dataset using the above sorts of technique.

Be careful not to create false positive matches though, and be mindful that not everything will necessarily match up: you may get empty cells when using cell.cross. To mitigate this, filter rows using a crossed column to find the ones where there was no match and see if you can correct them by hand. Even if you don’t completely succeed in crossing data from one project to another, you might manage to automate the crossing of most of the rows, minimising the amount of hand-crafted copying you have to do to tidy up the real odds and ends…
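
One way to get a feel for both risks before merging is to audit the key columns. The Python sketch below lists keys on one side that have no partner on the other, and keys on the other side that occur more than once (where taking the first match would silently pick one of several rows). The audit_keys helper and the key values are hypothetical.

```python
from collections import Counter

def audit_keys(left_keys, right_keys):
    """Report left keys with no partner on the right, and right keys that occur more than once."""
    right_counts = Counter(right_keys)
    unmatched = [k for k in left_keys if k not in right_counts]
    ambiguous = sorted(k for k, n in right_counts.items() if n > 1)
    return unmatched, ambiguous

# Hypothetical key columns taken from two projects.
left = ["aberdeen", "bath", "cardiff"]
right = ["aberdeen", "bath", "bath", "durham"]

unmatched, ambiguous = audit_keys(left, right)
print(unmatched)  # ['cardiff']
print(ambiguous)  # ['bath']
```

Unmatched keys are candidates for correcting by hand; ambiguous keys deserve a closer look, because two different institutions may have collapsed onto the same key.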

So for example, here’s what I ended up using to create a “Pure key” column in Merge Test C:

fingerprint(replace(replace(replace(toLowercase(value),'the',''),'of',''),'university',''))

And in Merge Test A I create a “Complementary Key” column from the University column using fingerprint(value)

From the Complementary Key column in Merge Test A we call out to Merge Test C: cell.cross("Merge Test C", "Pure key").cells["UCAS ID"].value[0]
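
Putting the pieces together, the whole key-then-cross process can be sketched in Python as follows. Everything here is illustrative: the rows and the UCAS ID values are invented placeholders, I am assuming that the University column in Merge Test A holds short names such as “Aberdeen”, and simple_fingerprint and pure_key are hypothetical helpers that only approximate the GREL expressions above.

```python
import re

def simple_fingerprint(text):
    """Simplified fingerprint key: lowercase, strip punctuation, de-duplicate and sort words."""
    cleaned = re.sub(r"[^\w\s]", "", text.strip().lower())
    return " ".join(sorted(set(cleaned.split())))

def pure_key(name):
    """Mirror the Merge Test C expression: lowercase, drop 'the', 'of', 'university', then fingerprint."""
    lowered = name.lower()
    for token in ("the", "of", "university"):
        lowered = lowered.replace(token, "")
    return simple_fingerprint(lowered)

# Hypothetical rows; the UCAS ID values are placeholders, not real identifiers.
merge_test_c = [
    {"Institution": "The University of Aberdeen", "UCAS ID": "X01"},
    {"Institution": "The University of Bath", "UCAS ID": "X02"},
]
merge_test_a = [
    {"University": "Aberdeen"},
    {"University": "Bath"},
    {"University": "Cardiff"},
]

# Index Merge Test C by its "Pure key"; keep the first row seen for each key.
ucas_by_key = {}
for row in merge_test_c:
    ucas_by_key.setdefault(pure_key(row["Institution"]), row["UCAS ID"])

# Look each Merge Test A row up by its "Complementary Key"; blank when there is no match.
for row in merge_test_a:
    row["UCAS ID"] = ucas_by_key.get(simple_fingerprint(row["University"]), "")

print([row["UCAS ID"] for row in merge_test_a])  # ['X01', 'X02', '']
```

Whether the two key columns actually line up depends entirely on how each dataset writes its names, so it is worth checking a handful of generated keys by eye before trusting the merge.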

Obviously, this approach is far from ideal (there may be more “correct” and/or efficient ways of doing this!), and the process described above is admittedly rather clunky. But it does start to reveal some of what’s involved in bringing data across to one Google Refine project from another using columns that don’t quite match in the original datasets, even though they (nominally) refer to the same thing. It also provides a useful introductory exercise in some of the really quite powerful text processing commands in Google Refine…
