Last year I was part of a team (with Yemisi Akinbobola and Ogechi Ekeanyawu) that won a CNN MultiChoice African Journalist of the Year award for an investigation into Nigerian football agents. The project, which was funded by Journalismfund.eu and is also available in an immersive longform version, combined data journalism and networked production with on-the-ground reporting. Here are some of the lessons we drew from the project…

Data journalism’s AI opportunity: the 3 different types of machine learning & how they have already been used

This week I’m rounding off the first semester of classes on the new MA in Data Journalism with a session on artificial intelligence (AI) and machine learning. Machine learning is a subset of AI — and an area which holds enormous potential for journalism, both as a tool and as a subject for journalistic scrutiny.

So I thought I would share part of the class here, showing some examples of how the 3 types of machine learning (supervised, unsupervised, and reinforcement) have already been used for journalistic purposes, and using those examples to explain what each type is along the way.
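As a rough illustration of the difference between the first two types, here is a minimal Python sketch using made-up data. Supervised learning learns from examples that already carry a label; unsupervised learning looks for structure in data that has no labels at all. The labels ("routine", "newsworthy"), the points and the function names are hypothetical, and the two algorithms (nearest neighbour and k-means) are just simple representatives of each type, not the methods used in the journalistic examples. Reinforcement learning, which learns from rewards over repeated actions, doesn't fit into a sketch this short.

```python
import math

# Supervised: every training example comes with a label attached.
def nearest_neighbour(train, point):
    """Predict a label by copying the label of the closest labelled example."""
    _, label = min(train, key=lambda example: math.dist(example[0], point))
    return label

# Unsupervised: only the points are given; the algorithm groups them itself.
def k_means(points, k, iterations=10):
    """Group points into k clusters around repeatedly recalculated centres."""
    centroids = list(points[:k])  # naive initialisation: the first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each point to the cluster with the nearest centre.
            idx = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[idx].append(p)
        # Move each centre to the average of its points (keep it if empty).
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return clusters

# Hypothetical data: two features per item, plus a label for supervised learning.
train = [((1, 1), "routine"), ((1, 2), "routine"), ((8, 9), "newsworthy"), ((9, 8), "newsworthy")]
print(nearest_neighbour(train, (2, 1)))  # prints "routine"

# The same points with the labels thrown away.
clusters = k_means([p for p, _ in train], k=2)
print(clusters)  # prints [[(1, 1), (1, 2)], [(8, 9), (9, 8)]]
```

The point of the comparison: the classifier can only output labels a human supplied, while the clustering finds two groups on its own and leaves it to the journalist to decide what, if anything, they mean.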

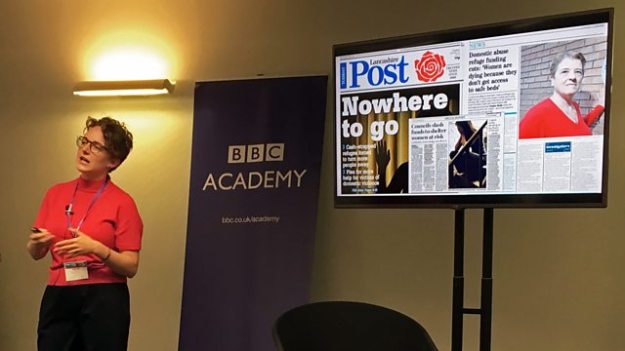

Local journalism is getting more data-driven — and other thoughts on Data Journalism UK 2017

Megan Lucero of the Bureau Local – photo: Jonny Jacobsen

Last week I hosted the second annual Data Journalism UK conference, a convenient excuse to bring together speakers from the news media, industry experts, charities and startups working in the field. You can read write-ups on Journalism.co.uk and the BBC Academy website (the BBC kindly hosted the event at BBC Birmingham), but I thought I’d also put my own thoughts down here…

The Bureau and the BBC: 2 networked models for supporting data journalism

2017 saw the launch of two projects with a remit to generate and stimulate data journalism at a local level: the Bureau of Investigative Journalism’s Bureau Local project, and the BBC’s Shared Data Unit.

Here are all the presentations from Data Journalism UK 2017

Megan Lucero at Data Journalism UK 2017. Photo by Jonny Jacobsen

Last week I had the pleasure of hosting the second annual Data Journalism UK conference in Birmingham.

The event featured speakers from the regional press, hyperlocal publishers, web startups, nonprofits, and national broadcasters in the UK and Ireland, with talks covering investigative journalism, automated factchecking, robot journalism, the Internet of Things, and networked, collaborative data journalism. You can read a report on the conference at Journalism.co.uk.

Announcing a part time PGCert in Data Journalism

Earlier this year I announced a new MA in Data Journalism. Now I am announcing a shorter, part-time version of the course.


The PGCert in Data Journalism takes place over 8 months and includes 3 modules from the full MA:

- Data Journalism;

- Law, Regulation and Institutions (including security); and

- Specialist Journalism, Investigations and Coding

The modules first develop a broad understanding of a range of data journalism techniques, before you choose to develop some of those in greater depth on a specialist project.

The course is designed for those working in industry who wish to gain accredited skills in data journalism, but who cannot take time out to study full time or may not want a full Master’s degree (a PGCert is 60 credits towards the 180 credits needed for a full MA).

Students on the PGCert can also apply to work with partner organisations such as The Telegraph, Trinity Mirror, and Haymarket brands including FourFourTwo.

More details are on the course webpage. If you want to talk about the PGCert you can contact me on Twitter @paulbradshaw or on email paul.bradshaw@bcu.ac.uk.

How to: get started with SQL in Carto and create filtered maps

Today I will be introducing my MA Data Journalism students to SQL (Structured Query Language), a language used widely in data journalism to query databases, datasets and APIs.

I’ll be partly using the mapping tool Carto as a way to get started with SQL, and thought I would share my tutorial here (especially as, since Carto’s recent redesign, the SQL tool is no longer easy to find).

So, here’s how you can get started using SQL in Carto — and where to find that pesky SQL option.
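The heart of a filtered map is a query of the form SELECT … FROM … WHERE …. Carto is built on PostgreSQL, but this basic pattern works the same way in most SQL databases, so the sketch below uses Python’s built-in `sqlite3` module to let you try it without a Carto account. The table name `incidents`, its columns and the figures are invented for illustration only.

```python
import sqlite3

# Hypothetical example data: (area, category, total)
rows = [
    ("Aston", "burglary", 12),
    ("Aston", "vehicle crime", 7),
    ("Edgbaston", "burglary", 4),
    ("Selly Oak", "burglary", 9),
]

# An in-memory database stands in for the dataset you would upload to Carto.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE incidents (area TEXT, category TEXT, total INTEGER)")
conn.executemany("INSERT INTO incidents VALUES (?, ?, ?)", rows)

# SELECT which columns, FROM which table, WHERE which rows to keep.
query = """
    SELECT area, total
    FROM incidents
    WHERE category = 'burglary' AND total > 5
    ORDER BY total DESC
"""
result = conn.execute(query).fetchall()
print(result)  # prints [('Aston', 12), ('Selly Oak', 9)]
conn.close()
```

In Carto itself you would typically write SELECT * rather than naming columns, so that the geometry column used to draw the map (the_geom) is kept in the filtered results; the WHERE clause is what does the filtering.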

Information is Beautiful Awards 2017: “Visualisation without story is nothing”

MA Data Journalism students Carmen Aguilar Garcia and Victoria Oliveres attended the Information is Beautiful awards this week and spoke to some of the nominees and winners. In a guest post for OJB they give a rundown of the highlights, plus insights from data visualisation pioneers Nadieh Bremer, Duncan Clark and Alessandro Zotta.

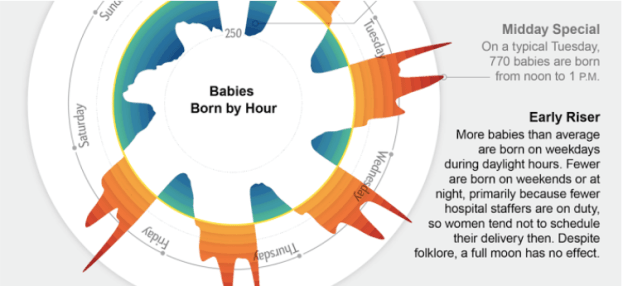

Nadieh Bremer was one of the major winners at the 2017 Information is Beautiful Awards, winning in both the Science & Technology and Unusual categories for Why Are so Many Babies Born around 8:00 A.M.? (with Zan Armstrong and Jennifer Christiansen) and Data Sketches in Twelve Installments (with Shirley Wu).

Silver, Science & Technology category – Why Are so Many Babies Born around 8:00 A.M.? by Nadieh Bremer, Zan Armstrong & Jennifer Christiansen. The prize was shared with Zan Armstrong, Scientific American.

Bremer graduated in astronomy in 2011, but a couple of years working as an analytics consultant were enough for her to realise that her passion was data visualisation. For the past year she has been exploring this world on her own.

How one Norwegian data team keeps track of their data journalism projects

In a special guest post Anders Eriksen from the #bord4 editorial development and data journalism team at Norwegian news website Bergens Tidende talks about how they manage large data projects.

Do you really know how you ended up with those results after analyzing data from a public source?

Well, often we did not. This is what we knew:

- We had downloaded some data in Excel format.

- We did some magic cleaning of the data in Excel.

- We did some manual alterations of wrong or wrongly formatted data.

- We sorted, grouped, pivoted, and eureka! We had a story!

Then we got a new and updated batch of the same data. Or the editor wanted to check how we ended up with those numbers, that story.

…And so the problems start to appear.

How could we do the exact same analysis over and over again on different batches of data?

And how could we explain to curious readers and editors exactly how we ended up with those numbers or that graph?

We needed a way to structure our data analysis and make it traceable, reusable and documented. This post will show you how. We will not teach you how to code, but we may inspire you to learn along the way.
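This is not #bord4’s own setup, which the full post describes, but a minimal Python sketch of the principle: every cleaning and analysis step lives in code rather than in manual edits, so a new batch of the same data can be run through exactly the same steps, and an editor can read how each number was produced. The column names, the values and the cleaning rules (tidying name capitalisation, removing spaces used as thousands separators) are hypothetical examples.

```python
import csv
import io
from collections import defaultdict

# Hypothetical raw export with typical problems: inconsistent names, spaced numbers.
RAW = """municipality,amount
Bergen, 1 200
bergen,800
Oslo,1500
"""

def clean(row):
    """Every cleaning rule is written down here and applied the same way to each batch."""
    return {
        "municipality": row["municipality"].strip().title(),  # "bergen" -> "Bergen"
        "amount": int(row["amount"].replace(" ", "")),         # " 1 200" -> 1200
    }

def analyse(text):
    """Load -> clean -> group: the whole analysis as one repeatable function."""
    rows = [clean(r) for r in csv.DictReader(io.StringIO(text))]
    totals = defaultdict(int)
    for r in rows:
        totals[r["municipality"]] += r["amount"]
    return dict(totals)

print(analyse(RAW))  # prints {'Bergen': 2000, 'Oslo': 1500}
```

When the updated batch arrives, you call `analyse()` on the new file instead of repeating the cleaning by hand, and the function itself is the answer to the editor asking how you got those numbers.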

I’m delivering a 3 day workshop on scraping for journalists in January

From January 23-25 I’ll be delivering a 3-day workshop on scraping in London at The Centre for Investigative Journalism. You don’t need any knowledge of scraping (automatically collecting information from multiple webpages or documents) or programming to take part.

Scraping has been used to report stories ranging from hard news items like “Half of GP surgeries open for under eight hours a day” to lighter stories in arts and culture such as “Movie Trilogies Get Worse with Each Film. Book Trilogies Get Better”.

By the end of the workshop you will be able to use scraping tools (without programming) and have the basis of the skills needed to write your own, more advanced and powerful, scrapers. You will also be able to communicate with programmers on relevant projects and think about editorial ideas for using scrapers.

A full outline of the course can be found on the Centre for Investigative Journalism website, where bookings can also be made, including discounts for freelancers and students.
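The workshop starts with tools that need no programming, but to give a flavour of what a hand-written scraper does, here is a minimal sketch using Python’s built-in `html.parser` module. It pulls the text out of each table cell on a page. The HTML, the surgery names and the figures are invented, and a real scraper would first download each page (and cope with much messier markup) before parsing it.

```python
from html.parser import HTMLParser

# Hypothetical page: in practice this HTML would be downloaded from each page you scrape.
PAGE = """
<table>
  <tr><td>Surgery A</td><td>7.5</td></tr>
  <tr><td>Surgery B</td><td>9</td></tr>
</table>
"""

class TableScraper(HTMLParser):
    """Collects the text of every <td> cell, grouped into one list per <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self.in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])          # start a new row
        elif tag == "td" and self.rows:
            self.in_cell = True
            self.rows[-1].append("")      # start a new, empty cell

    def handle_endtag(self, tag):
        if tag == "td":
            self.in_cell = False

    def handle_data(self, data):
        if self.in_cell:                  # ignore whitespace between tags
            self.rows[-1][-1] += data

scraper = TableScraper()
scraper.feed(PAGE)
scraper.close()
print(scraper.rows)  # prints [['Surgery A', '7.5'], ['Surgery B', '9']]
```

Scraping multiple pages is then a matter of running the same parser over each downloaded page and combining the rows into one dataset.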

“Data matters — but people are still the best sources of stories” — insights from investigative journalist Peter Geoghegan

In a guest post Jane Haynes speaks to investigative journalist Peter Geoghegan of the award-winning news site The Ferret about data, contacts and “nosing up the trousers of power”.

When the Scottish Government announced last month that it was banning fracking, it was a moment to savour for a group of journalists from an independent news site in the heart of the country.

The team from investigative cooperative The Ferret had been the first news organisation to reveal plans by nine energy companies to bid for licences to extract shale gas from central Scotland.

Using a combination of contact-led information and FOI requests, they uncovered the extent of the ambitions to dig deep into Scottish soil.

It was part of a steady flow of fracking stories from the Ferret team, ensuring those involved in making decisions were in no doubt of their responsibilities and recognised that every step would be scrutinised.